22. Information Retrieval and Text Ranking#

22.1. Overview of Information Retrieval#

22.1.1. Ad-hoc Retrieval#

Ad-hoc search and retrieval is a classic information retrieval (IR) task consisting of two steps: first, the user specifies his or her information need through a query; second, the information retrieval system fetches documents from a large corpus that are likely to be relevant to the query. Key elements in an ad-hoc retrieval system include

Query, the textual description of information need.

Corpus, a large collection of textual documents to be retrieved.

Relevance, whether a retrieved document can meet the user’s information need.

Ad-hoc retrieval has a long history of research and product development. Successful ad-hoc retrieval products include the Google search engine [Fig. 22.2] and Microsoft Bing [Fig. 22.3].

One core component of ad-hoc retrieval is text ranking. The documents returned by a retrieval system or a search engine typically take the form of an ordered list of texts. These texts (web pages, academic papers, news, tweets, etc.) are ordered by their relevance to the user’s query, or the user’s information need.

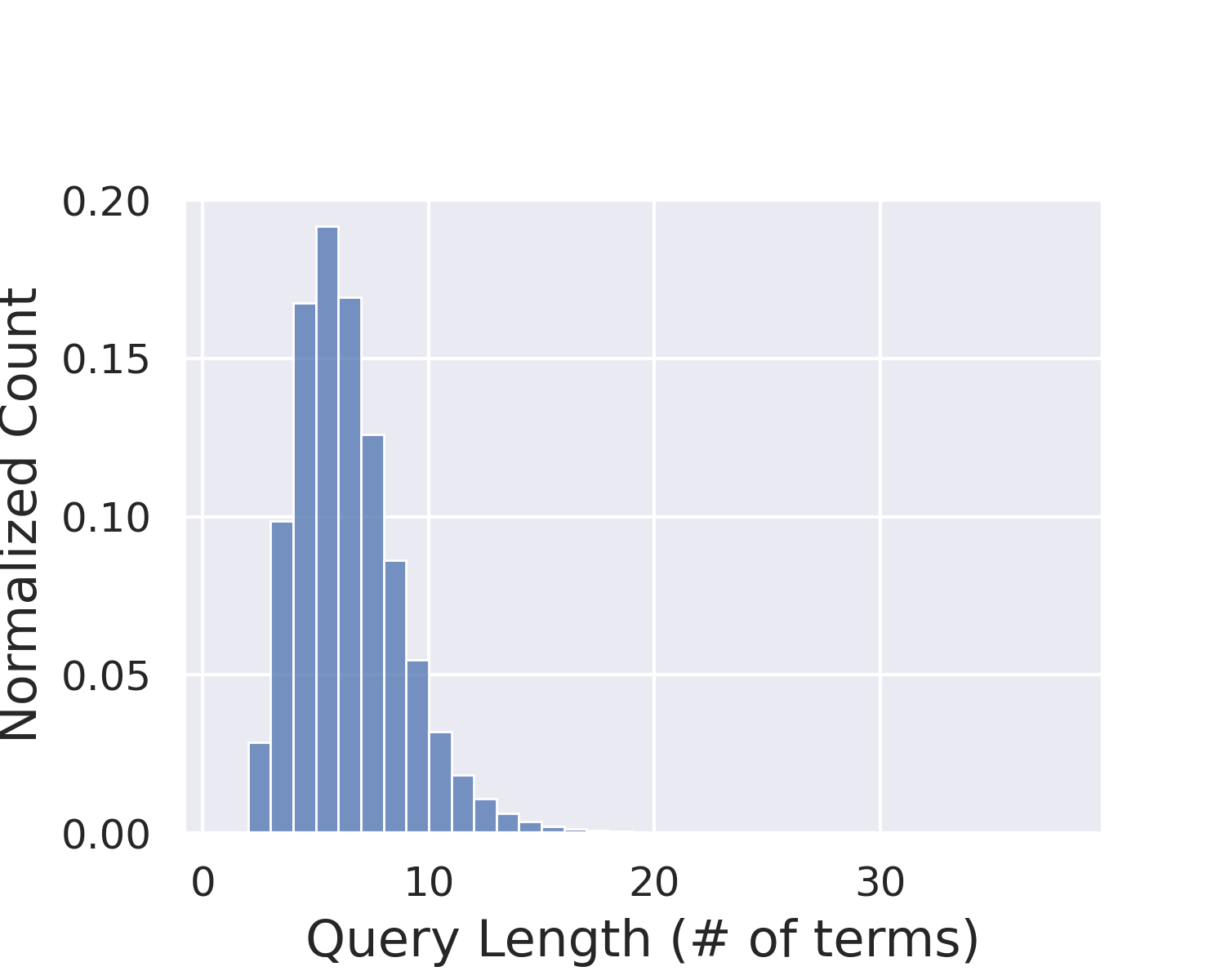

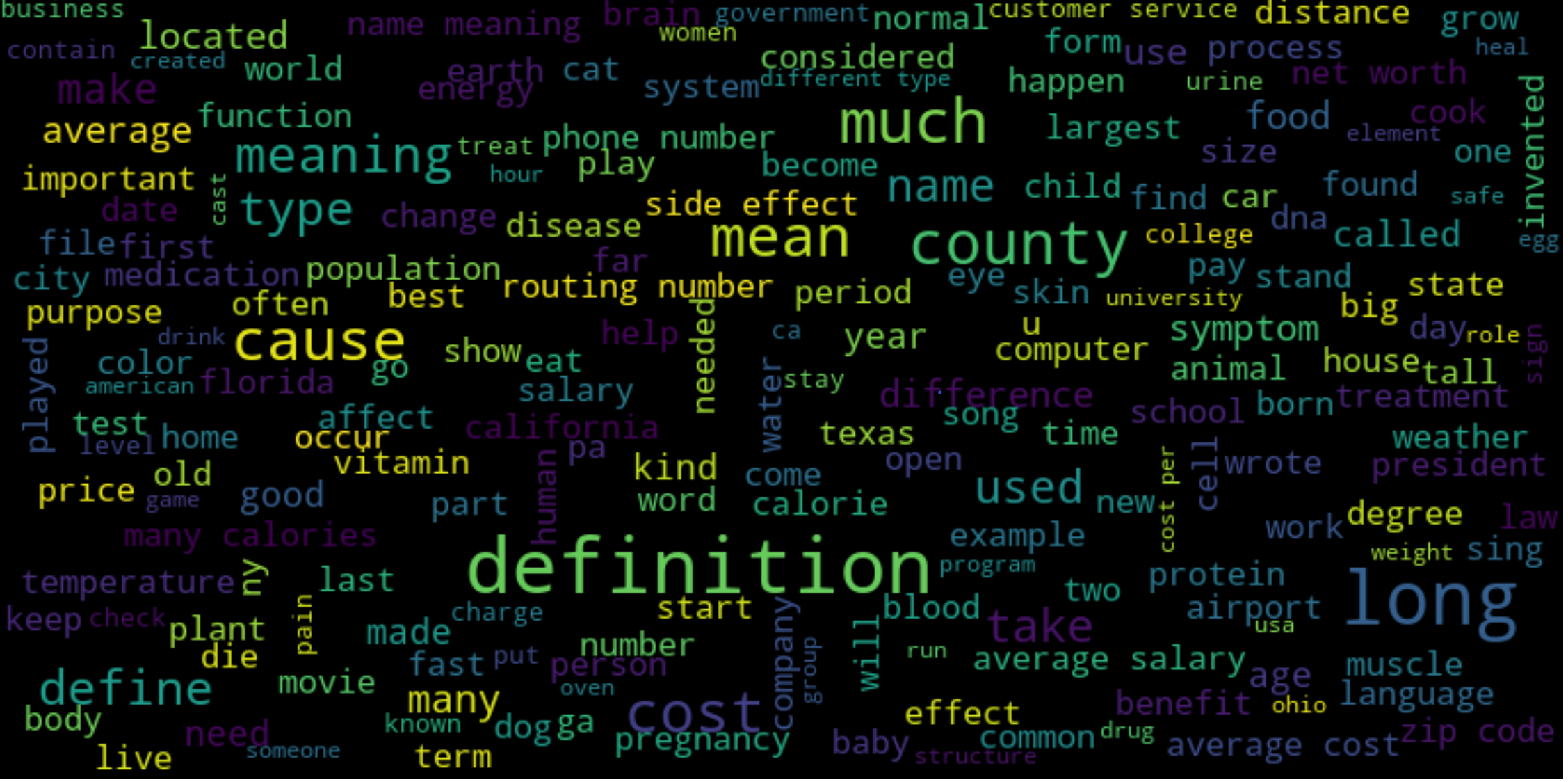

A major characteristic of ad-hoc retrieval is the heterogeneity of the query and the documents [Fig. 22.1]. A user’s query often comes with potentially unclear intent and is usually very short, ranging from a few words to a few sentences. On the other hand, documents are typically from a different set of authors with varying writing styles and have longer text length, ranging from multiple sentences to many paragraphs. Such heterogeneity poses significant challenges for vocabulary match and semantic match for ad-hoc retrieval tasks.

Fig. 22.1 Query length and document length distribution in Ad-hoc retrieval example using MS MARCO dataset.#

There have been decades of research and engineering effort on developing ad-hoc retrieval models and systems. Traditional IR systems primarily rely on techniques that identify exact term matches between a query and a document and compute a final relevance score using various weighting schemes. This exact matching approach has achieved tremendous success due to its scalability and computational efficiency - fetching a handful of relevant documents from billions of candidate documents. Unfortunately, exact match often suffers from the vocabulary mismatch problem, where sentences with similar meaning but different terms are considered not matched. The recent development of deep neural network approaches [HHG+13], particularly Transformer-based pre-trained large language models, has made great progress in semantic matching, or inexact match, by incorporating recent successes in natural language understanding and generation. Today, the combination of exact matching and semantic matching powers many IR and search products.

Fig. 22.2 Google search engine.#

Fig. 22.3 Microsoft Bing search engine.#

22.1.2. Open-domain Question Answering#

Another application closely related to IR is open-domain question answering (OpenQA), which has found widespread adoption in products like search engines, intelligent assistants, and automatic customer service. OpenQA is the task of answering factoid questions that humans might ask, using a large collection of documents (e.g., Wikipedia, web pages, or collected documents) as the information source. An example of OpenQA is

Q: What is the capital of China?

A: Beijing.

In contrast to ad-hoc retrieval, which simply returns a list of relevant documents, the goal of OpenQA is to identify (or extract) a span of text that directly answers the user’s question. Specifically, for factoid question answering, the OpenQA system primarily focuses on questions that can be answered with short phrases or named entities such as dates, locations, organizations, etc.

A typical modern OpenQA system adopts a two-stage pipeline [CFWB17] [Fig. 22.4]: (1) a document retriever selects a small set of relevant passages that probably contain the answer from a large-scale collection; (2) a document reader extracts the answer from the relevant documents returned by the document retriever. As in ad-hoc search, relevant documents must not only be topically related to the question but also correctly address it, which requires semantic understanding beyond exact term matching features. This division of labor is what makes OpenQA practical over a large corpus: an efficient document (or paragraph) retrieval technique narrows the collection down to a few relevant documents, and an accurate (yet expensive) reader model then reads only those documents to find the answer.

Nowadays many web search engines like Google and Bing have been evolving towards higher intelligence by incorporating OpenQA techniques into their search functionalities.

Fig. 22.4 A typical open-domain architecture where a retriever retrieves passages from information source relevant to the question.#

Compared with ad-hoc retrieval, OpenQA shows reduced heterogeneity between the question and the answer passage/sentence, yet it adds the challenges of precisely understanding the question and locating passages that might contain answers. On one hand, the question is usually in natural language, which is longer than keyword queries and less ambiguous in intent. On the other hand, the answer passages/sentences are usually much shorter text spans than documents, leading to more concentrated topics/semantics. Retrieval and reader models need to capture the patterns expected in the answer passage/sentence based on the intent of the question, such as the matching of the context words, the existence of the expected answer type, and so on.

22.1.3. Modern IR Systems#

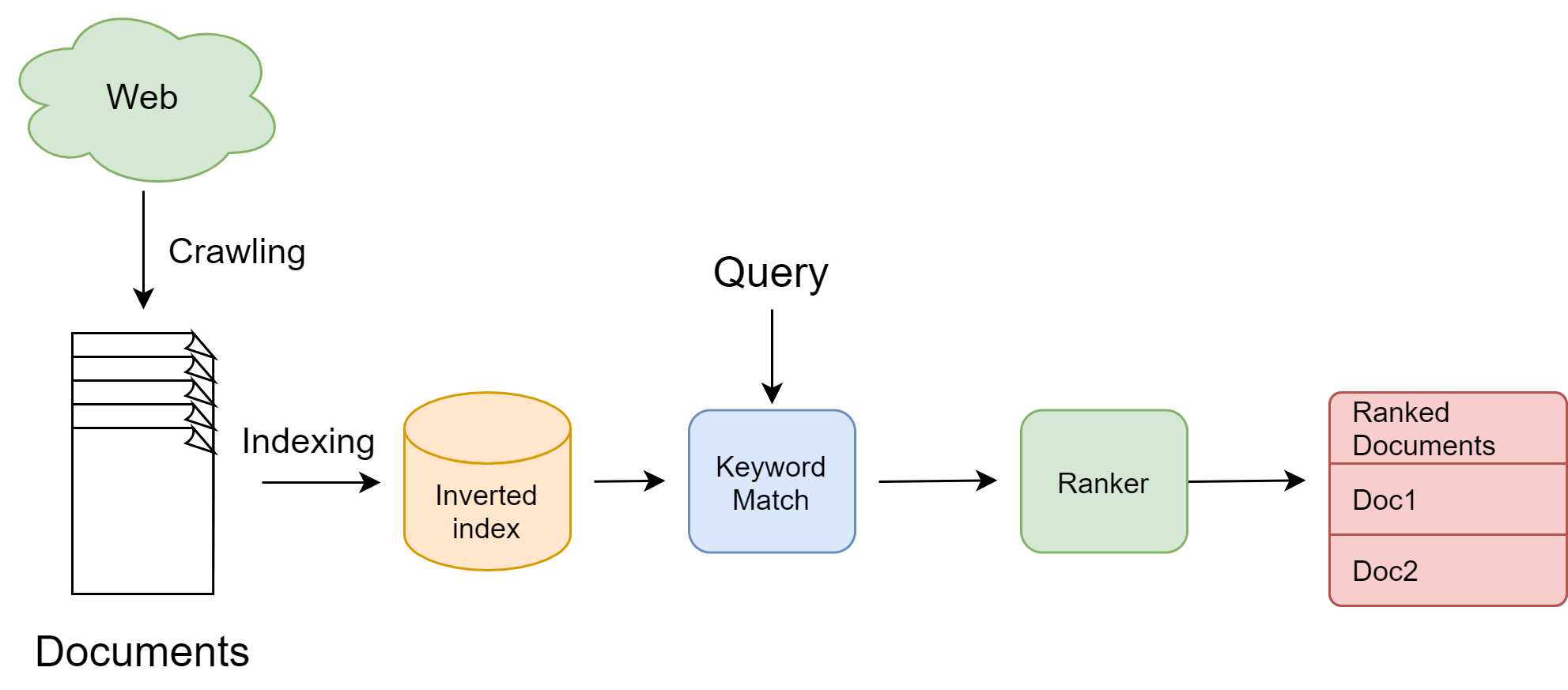

A traditional IR system, or concretely a search engine, operates through several key steps.

The first step is crawling. Web search engines discover and collect web pages by crawling from site to site; vertical search engines such as e-commerce search engines collect their product information from product descriptions and other product metadata. The second step is indexing, which creates an inverted index that maps key words to document ids. The last step is searching, a process that accepts a text query as input, looks up relevant documents in the inverted index, ranks the documents, and returns a list of results ranked by their relevance to the query.
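The indexing and lookup steps can be sketched in a few lines of Python. This is only a minimal illustration: the three-document corpus, the whitespace tokenizer, and the function names `build_inverted_index` and `lookup` are assumptions made here, not part of any particular search engine.

```python
from collections import defaultdict


def build_inverted_index(docs):
    """Map each lowercase whitespace token to the sorted ids of documents containing it."""
    postings = defaultdict(set)
    for doc_id, text in docs.items():
        for token in text.lower().split():
            postings[token].add(doc_id)
    return {token: sorted(ids) for token, ids in postings.items()}


def lookup(index, query):
    """Return the ids of documents that contain at least one query token."""
    hits = set()
    for token in query.lower().split():
        hits.update(index.get(token, []))
    return sorted(hits)


# Hypothetical toy corpus: document id -> text.
docs = {
    1: "cheap italian restaurant downtown",
    2: "best coffee shop near the station",
    3: "italian coffee brewing guide",
}
index = build_inverted_index(docs)
candidates = lookup(index, "italian coffee")
```

The lookup only produces an unranked candidate set; ranking those candidates is a separate step.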

Fig. 22.5 Key steps in a traditional IR system.#

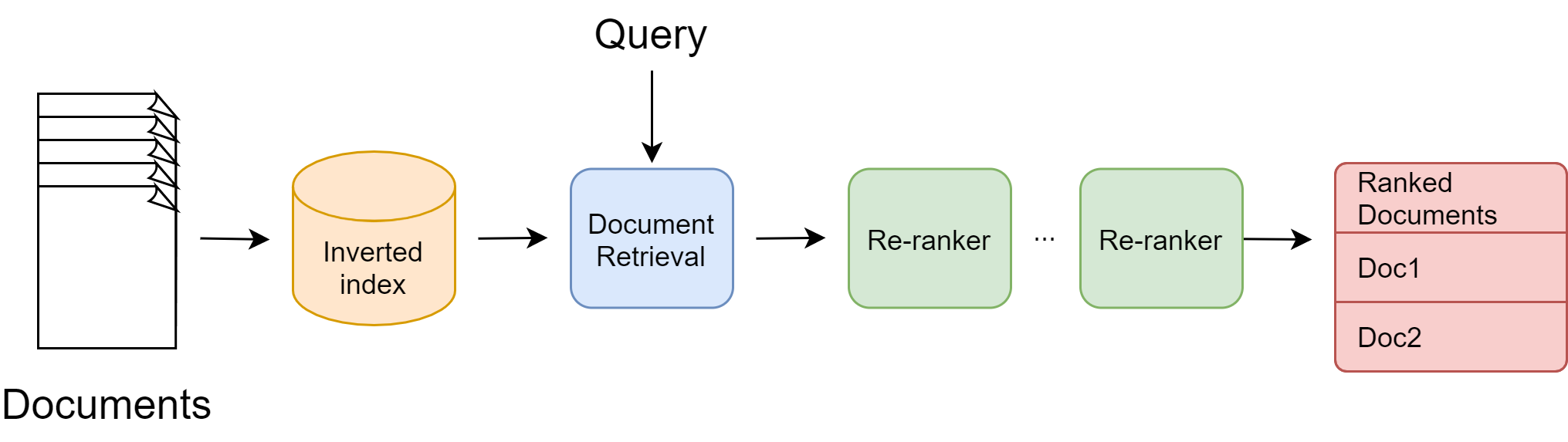

The rapid progress of deep neural networks [GBCB16] and their profound impact on natural language processing have also reshaped IR systems and brought IR into the deep learning age. Deep neural networks (e.g., Transformers [DCLT18]) have demonstrated semantic understanding capabilities that surpass traditional IR methods by a large margin, yet they suffer from high computational cost and latency. This motivates the development of multi-stage retrieval and ranking IR systems [Fig. 22.6] in order to better balance the trade-off between effectiveness (i.e., quality and accuracy of final results) and efficiency (i.e., computational cost and latency).

In this multi-stage pipeline, the early stages consist of simpler but more efficient models that reduce the candidate documents from billions to thousands; the later stages consist of complex models that perform accurate ranking on the handful of documents coming from the early stages.
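A toy retrieve-then-rerank sketch illustrates the division of labor between stages. Everything here is a stand-in chosen for illustration: the first stage counts shared terms, and the "expensive" second-stage scorer is just Jaccard similarity, where a real system would call a neural ranking model.

```python
def first_stage(query, corpus, k):
    """Cheap candidate generation: keep the k documents sharing the most terms with the query."""
    q_terms = set(query.lower().split())
    scored = []
    for doc_id, text in corpus.items():
        overlap = len(q_terms & set(text.lower().split()))
        if overlap > 0:
            scored.append((overlap, doc_id))
    scored.sort(key=lambda pair: (-pair[0], pair[1]))  # high overlap first, ties by id
    return [doc_id for _, doc_id in scored[:k]]


def rerank(query, candidate_ids, corpus, scorer):
    """Second stage: apply a costlier scorer to the few surviving candidates only."""
    return sorted(candidate_ids, key=lambda d: scorer(query, corpus[d]), reverse=True)


def jaccard(query, text):
    """Placeholder for an expensive model (assumption): Jaccard similarity of term sets."""
    a, b = set(query.lower().split()), set(text.lower().split())
    return len(a & b) / len(a | b)


# Hypothetical toy corpus.
corpus = {
    1: "distance from sun to earth",
    2: "how far is the moon from earth",
    3: "sun protection tips",
    4: "stock market news",
}
candidates = first_stage("distance earth sun", corpus, k=3)
final_ranking = rerank("distance earth sun", candidates, corpus, jaccard)
```

The key design point is that the second-stage scorer runs on `k` documents rather than on the whole corpus, so its per-document cost can be much higher.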

Fig. 22.6 The multi-stage architecture of modern information retrieval systems.#

In modern search engines, traditional IR models, which are based on term matching, serve as good candidates for early-stage models due to their efficiency. The core idea of the traditional approach is to count repetitions of query terms in the document. Larger counts indicate higher relevance. Different transformations and weighting schemes for those counts lead to a variety of possible TF-IDF ranking features.

Later-stage models are primarily deep learning models. Deep learning models in IR not only provide powerful representations of textual data that capture word and document semantics, allowing a machine to better understand queries and documents, but also open doors to multi-modal (e.g., image, video) and multilingual search, ultimately paving the way for developing intelligent search engines that deliver rich content to users.

Remark 22.1 (Why we need a semantic understanding model)

For web-scale search engines like Google or Bing, typically a very small set of popular pages can answer a good proportion of queries [MNCC16]. The vast majority of queries contain common terms. It is possible to use term matching between key words in the URL or title and the query terms for text ranking; it is also possible to simply memorize the user clicks between common queries and their ideal URLs. For example, the query CNN is always matched to the CNN landing page. These simple methods clearly do not require a semantic understanding of the query and the document content.

However, for new or tail queries as well as new or tail documents, a semantic understanding of queries and documents is crucial. In these cases, there is a lack of click evidence between the queries and the documents, and therefore a model that captures the semantic-level relationship between the query and the document content is necessary for text ranking.

22.1.4. Challenges And Opportunities In IR Systems#

22.1.4.1. Query Understanding And Rewriting#

A user’s query does not always give a crystal clear description of the user’s information need. Rather, it often comes with potential misspellings and unclear intent, and is usually very short, ranging from a few words to a few sentences [ch:neural-network-and-deep-learning:ApplicationNLP_IRSearch:tab:example_queries_MSMARCO]. There are several challenges in understanding the user’s query.

Users might use vastly different query representations even though they have the same search intent. For example, suppose users would like to get information about the distance between the Sun and the Earth. Commonly used queries could be

how far earth sun

distance from sun to earth

distance of earth from sun

how far earth is from the sun

We can see that some of them are just key words rather than full sentences, and some of them are not grammatically correct.

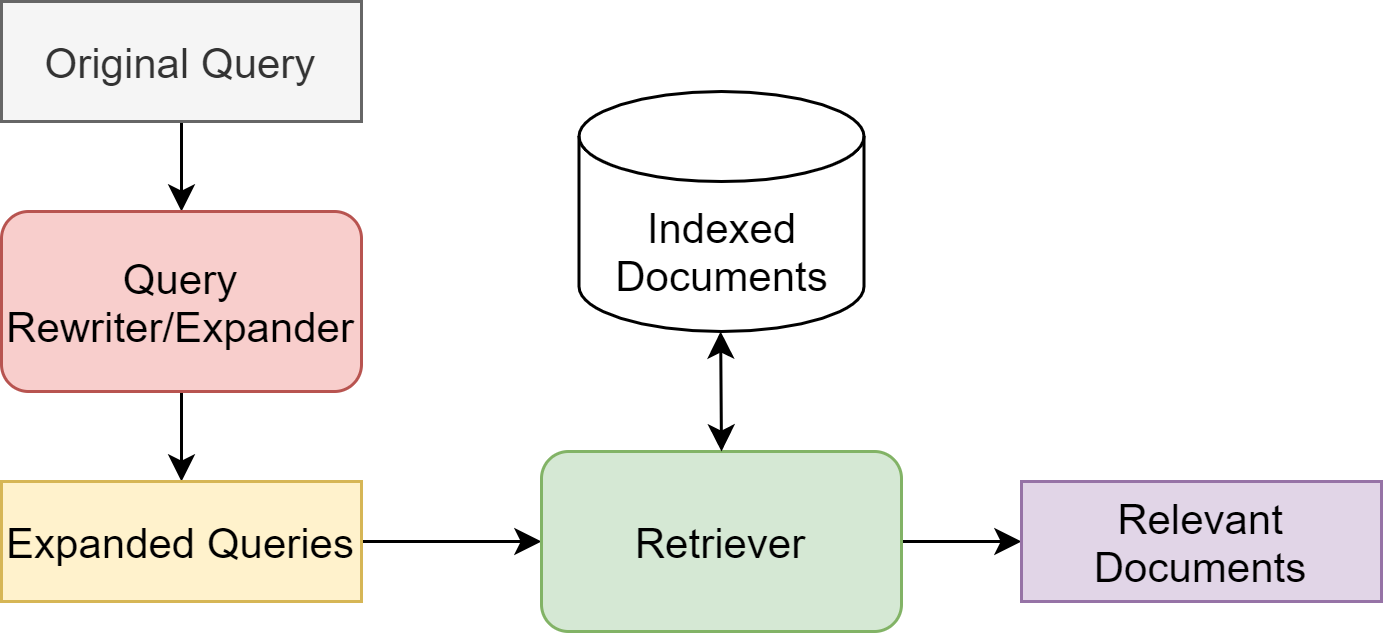

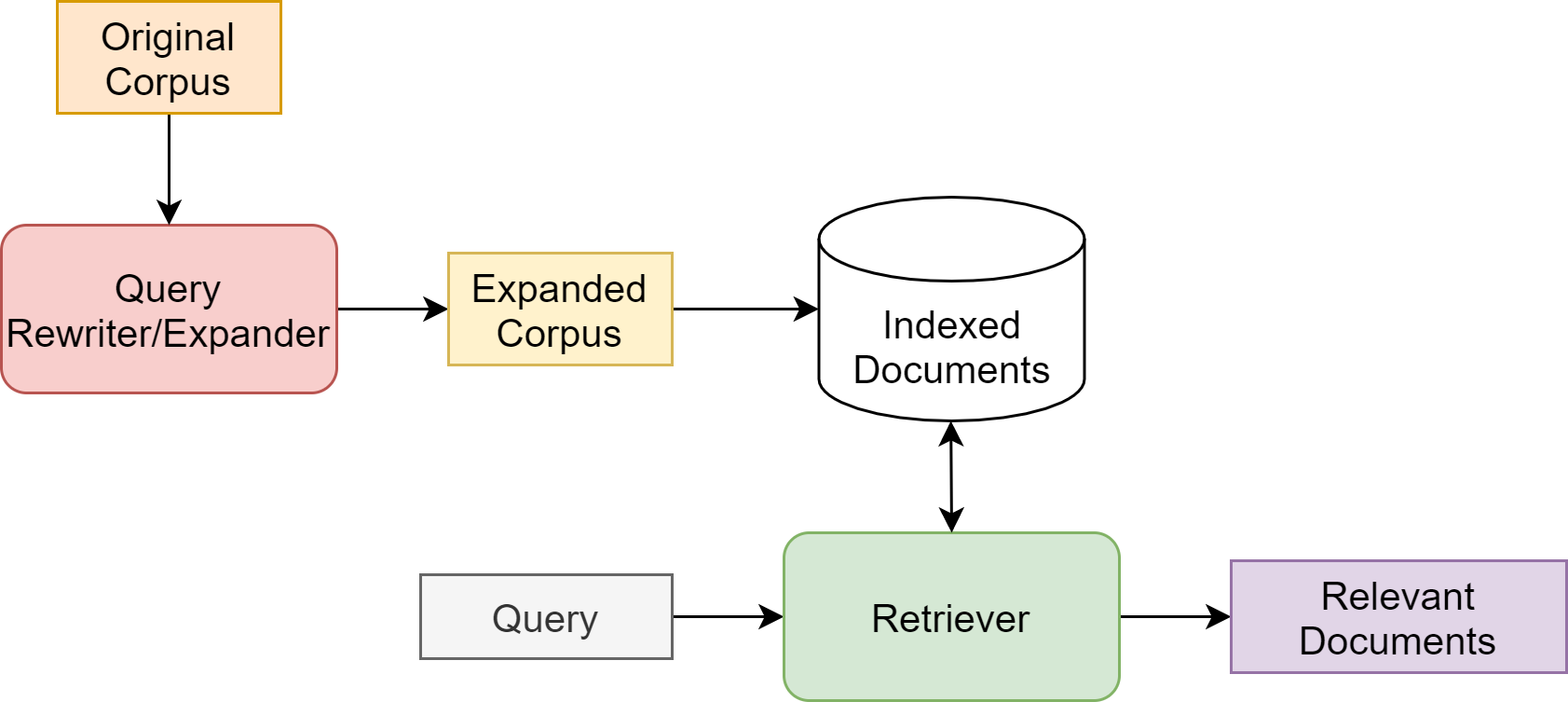

There are also more challenging scenarios where queries are poorly worded and far from describing the searcher’s actual information needs. Typically, we employ a query rewriting component to expand the search and increase recall, i.e., to retrieve a larger set of results, with the hope that relevant results will not be missed. Such a query rewriting component has multiple sub-components, which are summarized below.

Spell checker: Spell checking queries is an important and necessary feature of modern search. Spell checking enhances user experience by fixing basic spelling mistakes, e.g., correcting itlian restaurat to italian restaurant.

Query expansion: Query expansion improves search result retrieval by adding or substituting terms in the user’s query. Essentially, these additional terms aim to minimize the mismatch between the searcher’s query and the available documents. For example, for the query italian restaurant, we can expand restaurant to food or cuisine to search all potential candidates.

Query relaxation: Whereas query expansion adds terms, query relaxation removes them; it widens the search scope when the user’s query is too restrictive. For example, a search for good Italian restaurant can be relaxed to italian restaurant.

Query intent understanding: This sub-component aims to figure out the main intent behind the query, e.g., the query coffee shop most likely has a local intent (an interest in nearby places) and the query earthquake may have a news intent. Later on, this intent will help in selecting and ranking the best documents for the query.
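The first three sub-components can be sketched with the standard library alone. The vocabulary, synonym table, and list of droppable modifiers below are hypothetical, hand-written stand-ins; production systems typically learn them from query logs, and the fuzzy matching via `difflib.get_close_matches` is only one simple way to do spell correction.

```python
import difflib

# Hypothetical resources, hand-written for this illustration only.
VOCAB = ["italian", "restaurant", "coffee", "shop", "good"]
SYNONYMS = {"restaurant": ["food", "cuisine"]}
OPTIONAL_MODIFIERS = {"good"}


def spell_correct(query):
    """Replace each token by its closest vocabulary word, if one is similar enough."""
    corrected = []
    for token in query.lower().split():
        match = difflib.get_close_matches(token, VOCAB, n=1, cutoff=0.8)
        corrected.append(match[0] if match else token)
    return " ".join(corrected)


def expand(query):
    """Return the query plus variants in which one term is swapped for a synonym."""
    tokens = query.lower().split()
    variants = [" ".join(tokens)]
    for i, token in enumerate(tokens):
        for synonym in SYNONYMS.get(token, []):
            variants.append(" ".join(tokens[:i] + [synonym] + tokens[i + 1:]))
    return variants


def relax(query):
    """Drop restrictive modifiers to widen the search scope."""
    return " ".join(t for t in query.lower().split() if t not in OPTIONAL_MODIFIERS)


fixed = spell_correct("itlian restaurat")
variants = expand(fixed)
relaxed = relax("good Italian restaurant")
```

The retrieval stage would then issue all variants and merge the results, trading some precision for higher recall.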

Given a rewritten query, it is also important to correctly weigh specific terms in the query so that we can narrow down the search scope. Consider the query NBA news: a relevant document is expected to be about both NBA and news, but with more focus on NBA. There are traditional rule-based approaches to determining term importance, as well as recent data-driven approaches that determine term importance based on sophisticated natural language and context understanding.

To improve relevance ranking, it is often necessary to incorporate additional context information (e.g., time, location, etc.) into the user’s query. For example, when a user types in the query coffee shop, retrieving coffee shops in ascending order of distance to the user’s location can generally improve relevance ranking. Still, it remains challenging to decide for which types of queries we need to incorporate the context information.

Fig. 22.7 Word cloud visualization for common query words using MS MARCO data.#

22.1.4.2. Exact Match And Semantic Match#

Traditional IR systems retrieve documents mainly by matching keywords in documents with those in search queries. While in many cases exact term match naturally ensures semantic match, there are cases where exact term matching is insufficient.

The first reason is the polysemy of words. That is, a word can mean different things depending on context. The meaning of book is different in text book and book a hotel room. Short queries particularly suffer from polysemy because they are often devoid of context.

The second reason is that a concept is often expressed using different vocabularies and language styles in documents and queries. As a result, an exact-match model has difficulty retrieving documents that contain none of the query terms but turn out to be relevant.

Modern neural IR models enable semantic retrieval by learning latent representations of text from data and retrieving documents based on semantic similarity.

| Query | “Weather Related Fatalities” |
|---|---|
| Information Need | A relevant document will report a type of weather event which has directly caused at least one fatality in some location. |
| Lexical Document | “.. Oklahoma and South Carolina each recorded three fatalities. There were two each in Arizona, Kentucky, Missouri, Utah and Virginia. Recording a single lightning death for the year were Washington, D.C.; Kansas, Montana, North Dakota, ..” |
| Semantic Document | “.. Closed roads and icy highways took their toll as at least one motorist was killed in a 17-vehicle pileup in Idaho, a tour bus crashed on an icy stretch of Sierra Nevada interstate and 100-car string of accidents occurred near Seattle …” |

An IR system that relies solely on semantic retrieval is vulnerable to queries that contain rare words. This is because rare words appear infrequently, or never, in the training data, so the learned representations of queries containing rare words might be poor due to the nature of data-driven learning. On the other hand, exact matching approaches are robust to rare words and can precisely retrieve documents containing rare terms.

Another drawback of semantic retrieval is high false positives: retrieving documents that are only loosely related to the query.

Nowadays, much effort has been directed toward building strong and intelligent modern IR systems by combining exact match and semantic match approaches in different ways. Examples include joint optimization of hybrid exact match and semantic match systems, enhancing exact match via semantic-based query and document expansion, etc.

22.1.4.3. Robustness To Document Variations#

In response to users’ queries and questions, IR systems need to search a large collection of text documents, typically at the billion-level scale, to retrieve relevant ones. These documents consist mostly of unstructured natural language text, as opposed to structured data like tables or forms.

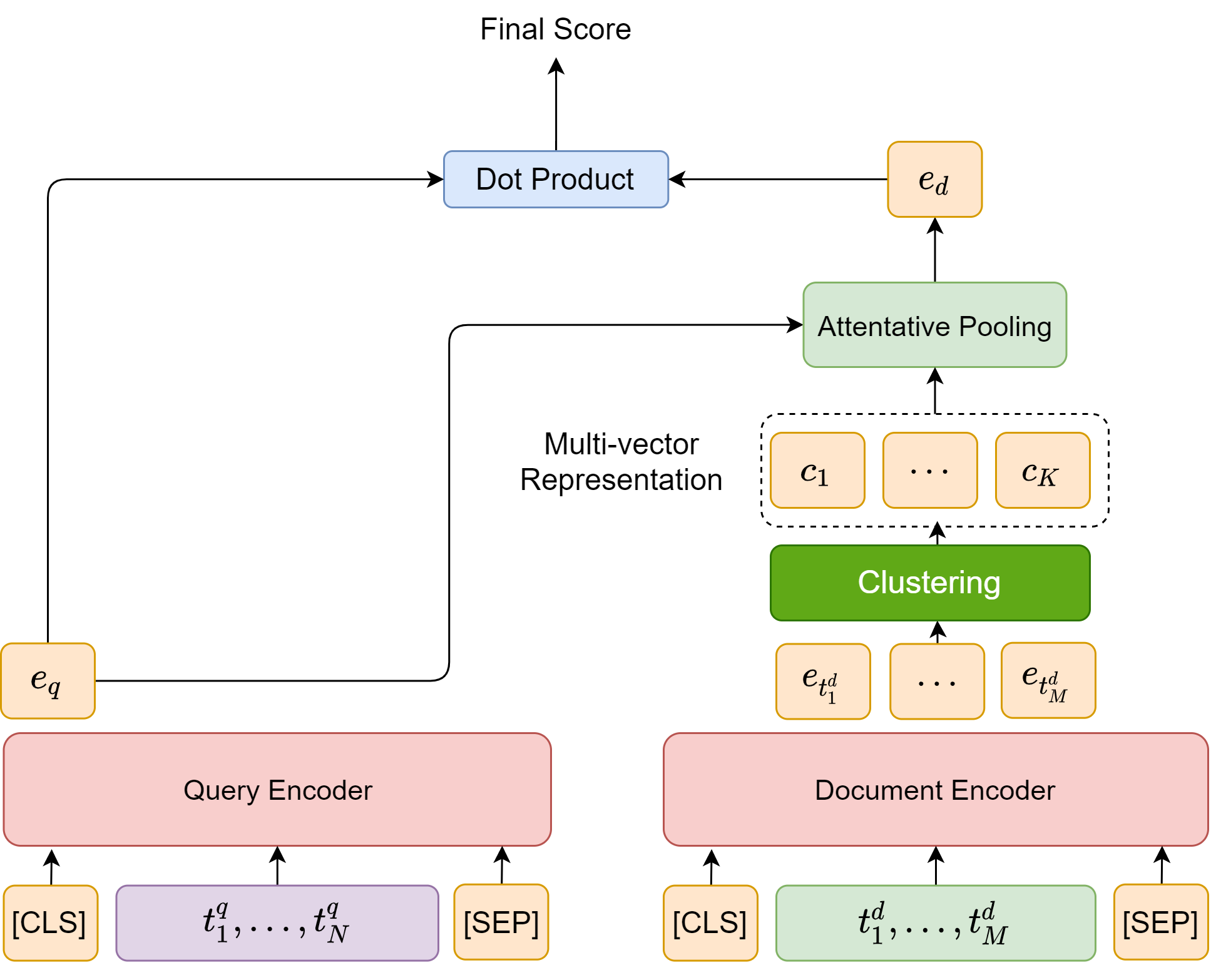

Documents can vary in length, ranging from sentences (e.g., searching for similar questions in a community question answering application like Quora) to lengthy web pages. A long document might be like a short document, covering a similar scope but with more words, which is known as the Verbosity hypothesis. On the other hand, a long document might consist of a number of unrelated short documents concatenated together, which is known as the Scope hypothesis. The wide variation of document forms leads to different strategies. For example, following the Verbosity hypothesis, a long document is represented by a single feature vector. Following the Scope hypothesis, one can break a long document into several semantically distinctive parts and represent each of them by a separate feature vector. We can consider each part as the unit of retrieval, or rank the long document by aggregating evidence across its constituent parts.

For full-text scientific articles, we might choose to consider only article titles and abstracts, and ignore most of the numerical results and analysis.

There are also challenges in breaking a long document into semantically distinctive parts and encoding each part into a meaningful representation. Recent neural network methods extract semantic parts by clustering tokens in the hidden space and represent documents by multi-vector representations [HSLW19, LETC21, TSJ+21].
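Under the Scope hypothesis, one simple strategy is to split a long document into overlapping passages, score each passage, and aggregate with a maximum. The sketch below assumes fixed-size token windows and a term-overlap scorer; both are illustrative choices made here, and the window size and stride are arbitrary.

```python
def split_into_passages(tokens, size, stride):
    """Overlapping windows of `size` tokens, advancing by `stride` (assumes stride <= size)."""
    if len(tokens) <= size:
        return [tokens]
    return [tokens[i:i + size] for i in range(0, len(tokens) - size + stride, stride)]


def overlap_score(query_tokens, passage_tokens):
    """Toy relevance score: number of distinct query terms present in the passage."""
    return len(set(query_tokens) & set(passage_tokens))


def score_long_document(query, document, size=8, stride=4):
    """Rank a long document by its best-matching passage (max aggregation)."""
    q_tokens = query.lower().split()
    passages = split_into_passages(document.lower().split(), size, stride)
    return max(overlap_score(q_tokens, p) for p in passages)


# Hypothetical document that concatenates two unrelated topics.
document = (
    "stock prices rose sharply today as markets reacted "
    "the distance from the sun to the earth is large"
)
doc_score = score_long_document("distance sun earth", document)
```

Other aggregations (sum, mean of the top few passages) are equally plausible; the maximum is used here only because it is the simplest to reason about.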

22.1.4.4. Computational Efficiency#

IR products such as search engines often serve a huge pool of users and need to handle a tremendous volume of search requests during peak time (e.g., when there is a breaking news event). Because the computational efficiency of IR models directly affects user-perceived latency, it is critical to the user experience. A long-standing challenge is to achieve high accuracy/relevance in the fetched documents while maintaining low latency. While traditional IR methods based on exact term match have excellent computational efficiency and scalability, they suffer from low accuracy due to the vocabulary and semantic mismatch problems. Recent progress in deep learning and natural language processing is highlighted by complex Transformer-based models [DCLT18] that achieve large accuracy gains over traditional IR yet incur high latency. There are numerous ongoing studies (e.g., [GDC21, MC19, MDC17]) aiming to bring together the benefits of the two sides via hybrid modeling methodologies.

To alleviate the computational bottleneck of deep learning based dense retrieval, state-of-the-art search engines also adopt a multi-stage retrieval pipeline: an efficient first-stage retriever uses the query to fetch a set of documents from the entire document collection, and subsequently one or more powerful re-rankers refine the results.

22.2. Text Ranking Evaluation Metrics#

Consider a large corpus containing \(N\) documents \(D=\{d_1,...,d_N\}\). Given a query \(q\), suppose the retriever and its subsequent re-ranker (if any) ultimately produce an ordered list of \(k\) documents \(R_q = \{d_{i_1},...,d_{i_k}\}\), where documents \(d_{i_1},...,d_{i_k} \) are ranked by some relevance measure with respect to the query.

In the following, we discuss several commonly used metrics to evaluate text ranking quality and IR system.

22.2.1. Precision And Recall#

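Precision and recall are metrics concerned with the fraction of relevant documents retrieved for a query \(q\); they are not concerned with the ranking order. Specifically, precision computes the fraction of relevant documents with respect to the total number of documents in the retrieved set \(R_{q}\), where \(R_{q}\) has \(K\) documents; recall computes the fraction of relevant documents in \(R_{q}\) with respect to the total number of relevant documents in the corpus \(D\). More formally, we have

\[\operatorname{Precision}_{q}=\frac{\sum_{d \in R_{q}} \operatorname{rel}_{q}(d)}{\left|R_{q}\right|}, \quad \operatorname{Recall}_{q}=\frac{\sum_{d \in R_{q}} \operatorname{rel}_{q}(d)}{\sum_{d \in D} \operatorname{rel}_{q}(d)}\]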

where \(\operatorname{rel}_{q}(d)\) is a binary indicator function indicating if document \(d\) is relevant to \(q\). Note that the denominator \(\sum_{d \in D} \operatorname{rel}_{q}(d)\) is the total number of relevant documents in \(D\), which is a constant.

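Typically, we only retrieve a fixed number \(K\) of documents, i.e., \(|R_q| = K\), where \(K\) typically ranges from 100 to 1000. The precision and recall at a given \(K\) can be averaged over a set of queries \(Q\), that is

\[\operatorname{Precision}@K=\frac{1}{|Q|} \sum_{q \in Q} \frac{\sum_{d \in R_{q}} \operatorname{rel}_{q}(d)}{K}, \quad \operatorname{Recall}@K=\frac{1}{|Q|} \sum_{q \in Q} \frac{\sum_{d \in R_{q}} \operatorname{rel}_{q}(d)}{\sum_{d \in D} \operatorname{rel}_{q}(d)}\]

Because the denominator of recall is fixed, recall can only stay the same or increase as \(K\) increases.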
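As a concrete illustration, precision and recall at \(K\) can be computed from a ranked list and a set of relevant document ids. The function names and the document ids below are made up for this sketch.

```python
def precision_recall_at_k(ranked_ids, relevant_ids, k):
    """Precision@k and recall@k for one query, with binary relevance judgments."""
    top_k = ranked_ids[:k]
    hits = sum(1 for doc_id in top_k if doc_id in relevant_ids)
    precision = hits / len(top_k) if top_k else 0.0
    recall = hits / len(relevant_ids) if relevant_ids else 0.0
    return precision, recall


def mean_precision_recall_at_k(runs, k):
    """Average over queries; `runs` is a list of (ranked_ids, relevant_ids) pairs."""
    pairs = [precision_recall_at_k(ranked, relevant, k) for ranked, relevant in runs]
    n = len(pairs)
    return sum(p for p, _ in pairs) / n, sum(r for _, r in pairs) / n


# Hypothetical system output and ground truth for a single query.
ranked = ["d3", "d1", "d7", "d2", "d9"]
relevant = {"d1", "d2", "d5"}
p_at_2, r_at_2 = precision_recall_at_k(ranked, relevant, 2)
p_at_5, r_at_5 = precision_recall_at_k(ranked, relevant, 5)
```

In this example recall grows from \(K=2\) to \(K=5\) while precision drops, which is the usual trade-off when the retrieved set is enlarged.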

22.2.2. Normalized Discounted Cumulative Gain (NDCG)#

When we are evaluating retrieval and ranking results based on their relevance to the query, we normally evaluate the ranking result in the following way

The ranking result is good if documents with high relevance appear in the top several positions in search engine result list.

We expect documents with different degree of relevance should contribute to the final ranking in proportion to their relevance.

Cumulative Gain (CG) is the sum of the graded relevance scores of all documents in the search result list. CG only considers the relevance of the documents in the search result list, and does not consider the positions of these documents in the list. Given a rank position \(k\) in a result list, CG can be defined as:

\[CG_k=\sum_{i=1}^{k} s_i\]

where \(s_i\) is the relevance score, or custom defined gain, of document \(i\) in the result list. The relevance score of a document is typically provided by human annotators.

Discounted cumulative gain (DCG) is the discounted version of CG. The gain is accumulated from the top of the result list to the bottom, with the gain of each result discounted at lower ranks.

The traditional formula of DCG accumulated at a particular rank position \(k\) is defined as

\[DCG_k=\sum_{i=1}^{k} \frac{s_i}{\log_2(i+1)}\]

An alternative formulation of \(DCG\) places stronger emphasis on more relevant documents:

\[DCG_k=\sum_{i=1}^{k} \frac{2^{s_i}-1}{\log_2(i+1)}\]

The ideal DCG, IDCG, is computed the same way but after sorting all the candidate documents in the corpus by their ground-truth relevance, so that it gives the maximum possible DCG@k. The normalized DCG, NDCG, is then given by

\[NDCG_k=\frac{DCG_k}{IDCG_k}\]

Example 22.1

Consider 5 candidate documents with respect to a query. Let their ground truth relevance scores be

which corresponds to a perfect rank of \[s_1, s_5, s_4, s_2, s_3.\] Let the predicted scores be \[y_1=0.05, y_2=1.1, y_3=1, y_4=0.5, y_5=0.0,\] which corresponds to rank \[s_2, s_3, s_4, s_1, s_5.\]

For \(k=1,2\), we have

For \(k=3\), we have

For \(k=4\), we have
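Separately from the example above, DCG and NDCG can be computed programmatically with a short Python sketch. It assumes the standard \(\log_2(i+1)\) position discount and offers the \(2^{s}-1\) gain as an option; the graded relevance scores in the usage lines are hypothetical and unrelated to Example 22.1.

```python
import math


def dcg_at_k(gains, k, exponential=False):
    """DCG@k for relevance scores listed in ranked order (rank 1 first)."""
    total = 0.0
    for rank, score in enumerate(gains[:k], start=1):
        gain = 2 ** score - 1 if exponential else score
        total += gain / math.log2(rank + 1)
    return total


def ndcg_at_k(gains, k, exponential=False):
    """NDCG@k: DCG of the given order divided by DCG of the ideal (sorted) order."""
    ideal = sorted(gains, reverse=True)
    idcg = dcg_at_k(ideal, k, exponential)
    return dcg_at_k(gains, k, exponential) / idcg if idcg > 0 else 0.0


# Hypothetical graded relevance scores, in the order the system ranked the documents.
predicted_order_gains = [1, 3, 2]
ndcg_linear = ndcg_at_k(predicted_order_gains, k=3)
ndcg_exponential = ndcg_at_k(predicted_order_gains, k=3, exponential=True)
```

Here the ideal ordering is obtained by sorting the candidate list itself, which matches the definition of IDCG only when every relevant document is among the candidates.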

22.2.3. Online Metrics#

When a text ranking model is deployed to serve user’s request, we can also measure the model performance by tracking several online metrics.

Click-through rate and dwell time: When a user types a query and starts a search session, we can measure the success of the search session based on the user’s reactions. On a per-query level, we can define success via the click-through rate (CTR), which measures the ratio of clicks to impressions:

\[CTR=\frac{\text{number of clicks}}{\text{number of impressions}}\]

where an impression means a page displayed on the search engine result page, and a click means that the user clicks the page.

One problem with the click-through rate is that we cannot simply treat a click as a success of document retrieval and ranking. For example, a click might be immediately followed by a click back, as the user quickly realizes that the clicked document is not what he or she is looking for. We can alleviate this issue by removing clicks that have a short dwell time.

Time to success: Click-through rate only considers the search session of a single query. In real applications, a user’s search experience might span multiple query sessions until the user finds what he or she needs. For example, a user initially searches action movies, does not find the ideal results, and refines the initial query to a more specific one: action movies by Jackie Chan. Ideally, we can measure the time spent by the user in identifying the desired page as a metric.
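A dwell-time filter is easy to express in code. In the sketch below, the function name, the log format (one dwell time in seconds per click), and the 30-second threshold used in the usage lines are all assumptions for illustration; the appropriate threshold depends on the product.

```python
def click_through_rate(num_impressions, click_dwell_seconds, min_dwell=0.0):
    """CTR that only counts clicks whose dwell time is at least `min_dwell` seconds."""
    if num_impressions == 0:
        return 0.0
    counted = sum(1 for dwell in click_dwell_seconds if dwell >= min_dwell)
    return counted / num_impressions


# Hypothetical log: 100 impressions, 4 clicks with these dwell times (seconds).
dwell_times = [2, 45, 60, 5]
raw_ctr = click_through_rate(100, dwell_times)
filtered_ctr = click_through_rate(100, dwell_times, min_dwell=30)
```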

22.3. Traditional Sparse IR Fundamentals#

22.3.1. Exact Match Framework#

Most traditional approaches to ad-hoc retrieval simply count repetitions of the query terms in the document text and assign proper weights to matched terms to calculate a final matching score. This framework, also known as exact term matching, despite its simplicity, serves as a foundation for many IR systems. A variety of traditional IR methods fall into this framework and they mostly differ in different weighting (e.g., tf-idf) and term normalization (e.g., dogs to dog) schemes.

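In the exact term matching framework, we represent a query and a document by the sets of their constituent terms, that is, \(q = \{t^{q}_1,...,t^q_M\}\) and \(d = \{t^{d}_1,...,t^d_M\}\). With \(\mathbf{1}(\cdot)\) denoting the indicator function, the matching score between \(q\) and \(d\) with respect to a vocabulary \(V\) is given by:

\[S(q, d)=\sum_{t \in V} f(t) \cdot \mathbf{1}(t \in q \cap d)\]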

where \(f\) is some function of a term and its associated statistics, the three most important of which are

Term frequency (how many times a term occurs in a document);

Document frequency (the number of documents that contain at least one instance of the term);

Document length (the length of the document that the term occurs in).

Exact term match framework estimates document relevance based on the count of only the query terms in the document. The position of these occurrences and relationship with other terms in the document are ignored.

Methods in this framework, such as BM25, are based on exact matching of query and document words, which limits the information available to the ranking model and may lead to problems such as vocabulary mismatch.

22.3.2. TF-IDF Vector Space Model#

In the vector space model, we represent each query or document by a vector in a high-dimensional space. The vector has a dimensionality equal to the vocabulary size, and each vector component corresponds to a term in the vocabulary of the collection. This query vector representation stands in contrast to the term set representation of the previous section, which included only the terms appearing in the query. Given a query vector and a set of document vectors, one for each document in the collection, we rank the documents by computing a similarity measure between the query vector and each document vector.

The most commonly used similarity scoring function for a document vector \(\vec{d}\) and a query vector \(\vec{q}\) is the cosine similarity \(\operatorname{Sim}(\vec{d}, \vec{q})\), computed as

\[\operatorname{Sim}(\vec{d}, \vec{q})=\frac{\vec{d} \cdot \vec{q}}{\|\vec{d}\|\,\|\vec{q}\|}\]

The component value associated with term \(t\) is typically the product of term frequency \(tf(t)\) and inverse document frequency \(idf(t)\). In addition, cosine similarity has a length normalization component that implicitly handles issues related to document length.

Over the years, a number of popular variants of both the TF and the IDF functions have been proposed and evaluated. A basic version of \(tf(t)\) is given by

\[tf(t)= \begin{cases}1+\log f_{t, d} & \text{if } f_{t, d}>0 \\ 0 & \text{otherwise}\end{cases}\]

where \(f_{t, d}\) is the actual term frequency count of \(t\) in document \(d\). Here the basic intuition is that a term appearing many times in a document should be assigned a higher weight for that document, but its value should not necessarily increase linearly with the actual term frequency \(f_{t, d}\); hence the logarithm is used as a proxy for the saturation effect. Although two occurrences of a term should be given more weight than one occurrence, they shouldn’t necessarily be given twice the weight.

A common \(idf(t)\) function is given by

\[idf(t)=\log \frac{N}{N_t}\]

where \(N_t\) is the number of documents in the corpus that contain the term \(t\) and \(N\) is the total number of documents. Here the basic intuition behind the \(idf\) functions is that a term appearing in many documents should be assigned a lower weight than a term appearing in few documents.
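The vector space model can be sketched with sparse dictionaries in place of vocabulary-sized vectors. The sketch assumes the log-scaled term frequency \(1+\log f_{t,d}\) and \(idf(t)=\log(N/N_t)\), which are only one of the many variants mentioned above; the function names and the toy corpus are hypothetical.

```python
import math
from collections import Counter


def tf(count):
    """Log-scaled term frequency (assumed variant): 1 + log(count) for count > 0."""
    return 1.0 + math.log(count) if count > 0 else 0.0


def fit_idf(tokenized_docs):
    """idf(t) = log(N / N_t), where N_t is the number of documents containing t."""
    n_docs = len(tokenized_docs)
    df = Counter(term for doc in tokenized_docs for term in set(doc))
    return {term: math.log(n_docs / n_t) for term, n_t in df.items()}


def tfidf_vector(tokens, idf):
    """Sparse TF-IDF vector as {term: weight}; terms unseen in the corpus get weight 0."""
    return {term: tf(count) * idf.get(term, 0.0) for term, count in Counter(tokens).items()}


def cosine(u, v):
    """Cosine similarity between two sparse vectors."""
    dot = sum(weight * v.get(term, 0.0) for term, weight in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u > 0 and norm_v > 0 else 0.0


# Hypothetical tokenized corpus and query.
corpus = [["sun", "earth", "distance"], ["coffee", "shop"], ["sun", "protection", "tips"]]
idf = fit_idf(corpus)
doc_vectors = [tfidf_vector(doc, idf) for doc in corpus]
query_vector = tfidf_vector(["earth", "sun"], idf)
scores = [cosine(query_vector, d) for d in doc_vectors]
```

The first document shares both query terms and scores highest; the third shares only the more common term sun and scores lower; the second shares none and scores zero.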

22.3.3. BM25#

One of the most widely adopted exact matching methods is BM25 (short for Okapi BM25) [CMS10, RZ09, YFL18]. BM25 combines overlapping terms, term frequency (TF), inverse document frequency (IDF), and document length into the following formula

\[BM25(q, d)=\sum_{t_{q} \in q \cap d} idf(t_{q}) \cdot \frac{tf(t_{q}, d) \cdot\left(k_{1}+1\right)}{tf(t_{q}, d)+k_{1} \cdot\left(1-b+b \cdot \frac{|d|}{avgdl}\right)}\]

where \(tf(t_q, d)\) is the frequency of query term \(t_q\) in the document \(d\), \(|d|\) is the length (in terms of words) of document \(d\), \(avgdl\) is the average length of documents in the collection \(D\), and \(k_{1}\) and \(b\) are parameters that are usually tuned on a validation dataset. In practice, \(k_{1}\) is often set to a default value in the range \([1.2,2.0]\) and \(b\) to \(0.75\). The \(idf(t)\) is computed as

\[idf(t)=\log \frac{N-N_t+0.5}{N_t+0.5}\]

where \(N\) is the total number of documents and \(N_t\) is the number of documents that contain the term \(t\).

At first sight, BM25 looks quite like a traditional \(tf\times idf\) weight - a product of two components, one based on \(tf\) and one on \(idf\). Intuitively, a document \(d\) has a higher BM25 score if

Many query terms also frequently occur in the document;

These frequent co-occurring terms have larger idf values (i.e., they are not common terms).

However, there is one significant difference. The \(tf\) component in the BM25 uses some saturation mechanism to discount the impact of frequent terms in a document when the document length is long.

BM25 is not concerned with word semantics, that is, whether a word is a noun or a verb, or what each word means. It is only sensitive to word frequency (i.e., which words are common and which are rare) and to document length. If a query contains both common words and rare words, BM25 puts more weight on the rare words and favors documents that contain more of the query’s rare words. Besides, a term saturation mechanism is applied to decrease the matching signal when a matched word appears too frequently in the document, and a document-length normalization mechanism is used to discount term weights when a document is longer than the average document in the collection.

More specifically, the two parameters in BM25, \(k_1\) and \(b\), are designed to perform term frequency saturation and document-length normalization, respectively.

The constant \(k_{1}\) determines how the \(tf\) component of the term weight changes as the frequency increases. If \(k_{1}=0\), the term frequency component would be ignored and only term presence or absence would matter. If \(k_{1}\) is large, the term weight component would increase nearly linearly with the frequency.

The constant \(b\) regulates the impact of the length normalization, where \(b=0\) corresponds to no length normalization, and \(b=1\) is full normalization.
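The roles of \(k_1\) and \(b\) can be checked numerically. The sketch below assumes the standard Okapi form of the BM25 term-frequency component; the function name and the default values \(k_1=1.2\), \(b=0.75\) are choices made here for illustration.

```python
def bm25_tf_component(tf, doc_len, avgdl, k1=1.2, b=0.75):
    """Saturating, length-normalized term-frequency factor of BM25 (standard Okapi form)."""
    length_norm = 1.0 - b + b * doc_len / avgdl
    return tf * (k1 + 1.0) / (tf + k1 * length_norm)


# Saturation: the factor grows with tf but stays below k1 + 1.
weights = [bm25_tf_component(tf, doc_len=100, avgdl=100) for tf in (1, 10, 1000)]

# k1 = 0: only presence matters, the factor is 1 for any tf > 0.
presence_only = bm25_tf_component(5, doc_len=100, avgdl=100, k1=0.0)

# b = 0: document length has no effect.
short_doc = bm25_tf_component(2, doc_len=50, avgdl=100, b=0.0)
long_doc = bm25_tf_component(2, doc_len=200, avgdl=100, b=0.0)
```

With the default \(b\), the same term frequency yields a smaller factor in a document longer than average, which is the length normalization described above.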

Remark 22.2 (Weighting scheme for long queries)

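If the query is long, then we might also use a similar weighting for query terms. This is appropriate if the queries are paragraph-long information needs, but unnecessary for short queries. The score then becomes

\[BM25(q, d)=\sum_{t_{q} \in q \cap d} idf(t_{q}) \cdot \frac{tf(t_{q}, d) \cdot\left(k_{1}+1\right)}{tf(t_{q}, d)+k_{1} \cdot\left(1-b+b \cdot \frac{|d|}{avgdl}\right)} \cdot \frac{tf(t_{q}, q) \cdot\left(k_{2}+1\right)}{tf(t_{q}, q)+k_{2}}\]

with \(tf(t_q, q)\) being the frequency of term \(t_q\) in the query \(q\), and \(k_{2}\) being another positive tuning parameter that this time calibrates term frequency scaling of the query.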

22.3.4. BM25 Implementation#

To implement BM25 efficiently, we can pre-compute the document-side term statistics and store them, which is known as the indexing process. This includes the following steps:

Tokenize each document into tokens

Compute the number of documents containing each token and token frequency in each document.

Compute the idf for each token using the document frequencies

Compute the BM25 scores for each token in each document \(BM25(t_i,d)\)

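During the query process, we tokenize the query \(q\) into tokens \(t_i\) and compute the score of each document \(d\) by summing the pre-computed per-token scores, with \(BM25(t_i, d)=0\) when \(t_i\) does not occur in \(d\):

\[BM25(q, d)=\sum_{t_i \in q} BM25(t_i, d)\]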
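The indexing and query steps can be put together in a compact sketch. It assumes whitespace tokenization, the classic idf \(\log\frac{N-N_t+0.5}{N_t+0.5}\) (which becomes negative for terms found in more than half of the documents), and default parameters \(k_1=1.2\), \(b=0.75\); the function names and the five-document corpus are hypothetical.

```python
import math
from collections import Counter, defaultdict


def build_bm25_index(docs, k1=1.2, b=0.75):
    """Pre-compute BM25(t, d) for every (token, document) pair: token -> {doc_id: score}."""
    tokenized = {doc_id: text.lower().split() for doc_id, text in docs.items()}
    n_docs = len(tokenized)
    avgdl = sum(len(tokens) for tokens in tokenized.values()) / n_docs
    # Document frequency: number of documents containing each token.
    df = Counter(term for tokens in tokenized.values() for term in set(tokens))
    index = defaultdict(dict)
    for doc_id, tokens in tokenized.items():
        length_norm = 1.0 - b + b * len(tokens) / avgdl
        for term, tf in Counter(tokens).items():
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5))
            index[term][doc_id] = idf * tf * (k1 + 1.0) / (tf + k1 * length_norm)
    return index


def search(index, query, top_k=10):
    """Sum the pre-computed per-token scores and return (doc_id, score) pairs, best first."""
    scores = defaultdict(float)
    for term in query.lower().split():
        for doc_id, weight in index.get(term, {}).items():
            scores[doc_id] += weight
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)[:top_k]


# Hypothetical toy corpus.
docs = {
    1: "distance from sun to earth",
    2: "coffee shop near me",
    3: "sun protection tips",
    4: "nba news today",
    5: "stock market update",
}
index = build_bm25_index(docs)
results = search(index, "sun earth")
```

Because all document-side quantities are stored at indexing time, the query step reduces to dictionary lookups and additions over the postings of the query tokens.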

22.4. Semantic Dense Models#

22.4.1. Motivation#

For ad-hoc search, traditional exact-term matching models (e.g., BM25) are playing critical roles in both traditional IR systems [Fig. 22.5] and modern multi-stage pipelines [Fig. 22.6]. Unfortunately, exact-term matching inherently suffers from the vocabulary mismatch problem due to the fact that a concept is often expressed using different vocabularies and language styles in documents and queries.

Early latent semantic models such as latent semantic analysis (LSA) illustrated the idea of identifying semantically relevant documents for a query when lexical matching is insufficient. However, their effectiveness in addressing the language discrepancy between documents and search queries is limited by their weak modeling capacity (i.e., simple, linear models). Also, the parameters of these models are typically learned via unsupervised learning, i.e., by grouping different terms that occur in a similar context into the same semantic cluster.

The introduction of deep neural networks for semantic modeling and retrieval was pioneered in [HHG+13]. Recent deep learning models utilize neural networks with large learning capacity and user-interaction data for supervised learning, which has led to significant performance gains over LSA. Similarly, in the field of OpenQA [KOuguzM+20], whose first stage is to retrieve relevant passages that might contain the answer, semantic-based retrieval has also demonstrated performance gains over traditional retrieval methods.

22.4.2. Two Architecture Paradigms#

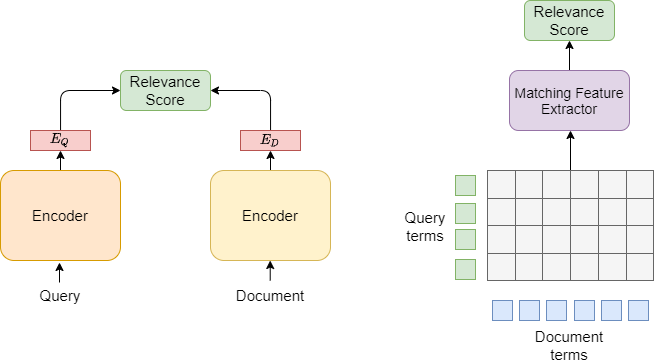

The current neural architecture paradigms for IR can be categorized into two classes: representation-based and interaction-based [Fig. 22.8].

In the representation-based architecture, a query and a document are encoded independently into two embedding vectors, then their relevance is estimated based on a single similarity score between the two embedding vectors.

Here we would like to make a critical distinction on symmetric vs. asymmetric encoding:

For symmetric encoding, the query and the entries in the corpus are typically of similar length and carry a similar amount of content, and they are encoded using the same network. Symmetric encoding is used for symmetric semantic search. An example would be searching for similar questions. For instance, the query could be How to learn Python online? and an entry that satisfies the search could be How to learn Python on the web?.

For asymmetric encoding, we usually have a short query (like a question or some keywords) and we would like to find a longer paragraph answering the query; they are encoded using two different networks. An example would be information retrieval. The entry is typically a paragraph or a web-page.

In the interaction-based architecture, instead of directly encoding \(q\) and \(d\) into individual embeddings, term-level interaction features across the query and the document are first constructed. Then a deep neural network is used to extract high-level matching features from the interactions and produce a final relevance score.

Fig. 22.8 Two common architectural paradigms in semantic retrieval learning: representation-based learning (left) and interaction-based learning (right).#

These two architectures have different strengths in modeling relevance and in model serving. For example, a representation-based architecture makes it possible to pre-compute and cache document representations offline, greatly reducing the online computational load per query. However, pre-computed, query-independent document representations often miss term-level matching features that are critical for constructing high-quality retrieval results. On the other hand, interaction-based architectures are often good at capturing fine-grained matching features between the query and the document.

Since interaction-based models can model interactions between word pairs in queries and documents, they are effective for re-ranking, but they are cost-prohibitive for first-stage retrieval because the expensive document-query interactions must be computed online for every candidate document.

Representation-based models enable low-latency, full-collection retrieval with a dense index. By representing queries and documents with dense vectors, retrieval is reduced to nearest neighbor search, or to a maximum inner product search (MIPS) problem if similarity is represented by an inner product.

In recent years, there has been increasing effort on accelerating maximum inner product and nearest neighbor search, which has led to high-quality libraries for nearest neighbor search such as HNSW [MY18], FAISS [JDJegou19], and ScaNN [GSL+20].
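To make the reduction to MIPS concrete, the following sketch performs exact (brute-force) maximum inner product search with NumPy. It is only a reference baseline under the assumption that document embeddings fit in memory as one matrix; the libraries mentioned above replace this linear scan with approximate index structures. The function name `mips_top_k` is hypothetical.

```python
import numpy as np


def mips_top_k(query_vec, doc_matrix, k=2):
    """Exact MIPS: return indices and scores of the k largest inner products."""
    scores = doc_matrix @ query_vec              # one inner product per document
    k = min(k, len(scores))
    top = np.argpartition(-scores, k - 1)[:k]    # unordered top-k in O(n)
    top = top[np.argsort(-scores[top])]          # order the k survivors
    return top, scores[top]
```

The document matrix can be computed and stored offline; at query time only the query encoder and this search need to run.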

22.4.3. Classic Representation-based Learning#

22.4.3.1. DSSM#

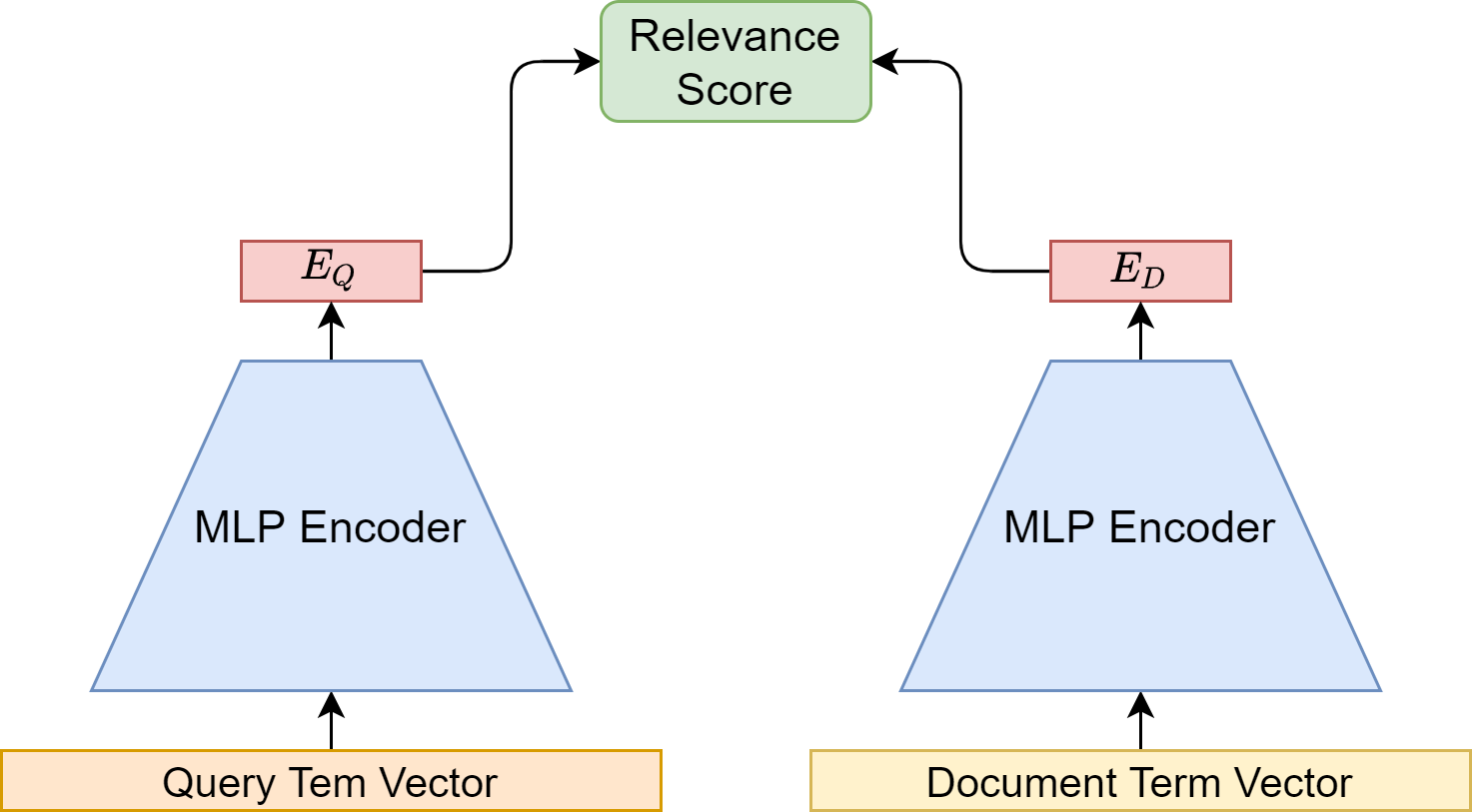

Deep structured semantic model (DSSM) [HHG+13] improves on previous latent semantic models in two aspects: 1) DSSM is trained with supervised learning on labeled data, while latent semantic models rely on unsupervised learning; 2) DSSM utilizes deep neural networks to capture richer semantic meaning.

The high-level architecture of DSSM is illustrated in Fig. 22.9. First, we represent a query and a document (only its title) by a sparse vector, respectively. Second, we apply a non-linear projection to map the query and the document sparse vectors to two low-dimensional embedding vectors in a common semantic space. Finally, the relevance of each document given the query is calculated as the cosine similarity between their embedding vectors in that semantic space.

Fig. 22.9 The architecture of DSSM. Two MLP encoders with shared parameters are used to encode a query and a document into dense vectors. Query and document are both represented by term vectors. The final relevance score is computed via dot product between the query vector and the document vector.#

To represent word features in the query and the documents, DSSM adopts a word-level sparse term vector representation with a letter 3-gram vocabulary, whose size is approximately \(30k \approx 30^3\). Here 30 is the approximate number of alphabet letters. This is also known as the letter-trigram word hashing technique. In other words, both the query and the documents are represented by sparse vectors with a dimensionality of \(30k\).

The usage of letter 3-gram vocabulary has multiple benefits compared to the full vocabulary:

It avoids the out-of-vocabulary (OOV) problem while keeping a finite-size vocabulary, and hence a fixed term vector dimensionality.

The use of letter n-grams can capture morphological meanings of words.

One problem of this method is collision, i.e., two different words could have the same letter n-gram vector representation, because the representation is a bag of letter n-grams that does not take their order into account. But the collision probability is rather low.
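As an illustration of word hashing, the sketch below breaks a word into letter trigrams after adding a boundary marker on each side, then counts the trigrams over a piece of text. Using `#` as the boundary marker and a `Counter` as the sparse vector are choices made for this example; the function names are hypothetical.

```python
from collections import Counter


def letter_trigrams(word):
    """Split a word into letter trigrams, with '#' marking the word boundaries."""
    marked = f"#{word.lower()}#"
    return [marked[i:i + 3] for i in range(len(marked) - 2)]


def hash_text(text):
    """Sparse bag-of-letter-trigrams vector for a query or a document title."""
    counts = Counter()
    for word in text.split():
        counts.update(letter_trigrams(word))
    return counts
```

For example, `letter_trigrams("good")` gives `['#go', 'goo', 'ood', 'od#']`. An unseen word still maps onto known trigrams, which is why the OOV problem disappears, and morphological variants of a word share most of their trigrams.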

| Word Size | Letter-Bigram | | Letter-Trigram | |
|---|---|---|---|---|
| | Token Size | Collision | Token Size | Collision |
| 40k | 1107 | 18 | 10306 | 2 |
| 500k | 1607 | 1192 | 30621 | 22 |

Training. The neural network model is trained on the clickthrough data to map a query and its relevant document to vectors that are similar to each other and vice versa. The click-through logs consist of a list of queries and their clicked documents. It is assumed that a query is relevant, at least partially, to the documents that are clicked on for that query.

The semantic relevance score between a query \(q\) and a document \(d\) is given by the cosine similarity of their embeddings:

\[
R(q, d)=\cos \left(E_{q}, E_{d}\right)=\frac{E_{q}^{\top} E_{d}}{\left\|E_{q}\right\|\left\|E_{d}\right\|},
\]

where \(E_{q}\) and \(E_{d}\) are the embedding vectors of the query and the document, respectively. The conditional probability of a document being relevant to a given query is now defined through a Softmax function

\[
P(d \mid q)=\frac{\exp (\gamma R(q, d))}{\sum_{d^{\prime} \in D} \exp \left(\gamma R\left(q, d^{\prime}\right)\right)},
\]

where \(\gamma\) is a smoothing factor as a hyperparameter. \(D\) denotes the set of candidate documents to be ranked. While \(D\) should ideally contain all possible documents in the corpus, in practice, for each query \(q\), \(D\) is approximated by including the clicked document \(d^{+}\) and four randomly selected un-clicked documents.

In training, the model parameters are estimated to maximize the likelihood of the clicked documents given the queries across the training set. Equivalently, we need to minimize the following loss function

\[
L=-\log \prod_{\left(q, d^{+}\right)} P\left(d^{+} \mid q\right).
\]
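The training objective for a single query can be sketched with NumPy as follows. The sketch assumes the embeddings have already been produced by the encoders and only shows the loss: cosine similarities are scaled by a smoothing factor, passed through a softmax over the clicked document and the sampled un-clicked documents, and the negative log-probability of the clicked document is returned. The default `gamma=10.0` and the function names are illustrative assumptions.

```python
import numpy as np


def cosine(a, b):
    """Cosine similarity between two embedding vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


def dssm_loss(e_q, e_pos, e_negs, gamma=10.0):
    """Negative log-likelihood of the clicked document under a softmax
    over {clicked document} + sampled un-clicked documents."""
    sims = np.array([cosine(e_q, e_pos)] + [cosine(e_q, e) for e in e_negs])
    logits = gamma * sims
    logits = logits - logits.max()                       # numerical stability
    log_prob_pos = logits[0] - np.log(np.exp(logits).sum())
    return -log_prob_pos
```

The loss is close to zero when the clicked document is much more similar to the query than the sampled negatives, and grows when a negative is more similar than the clicked document.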

Evaluation. DSSM is compared with traditional IR baselines such as TF-IDF, BM25, and LSA. Specifically, the best performing DNN-based semantic model, L-WH DNN, uses three hidden layers, including the layer with the letter-trigram-based word hashing, and an output layer, and is discriminatively trained on query-title pairs.

| Models | NDCG@1 | NDCG@3 | NDCG@10 |
|---|---|---|---|
| TF-IDF | 0.319 | 0.382 | 0.462 |
| BM25 | 0.308 | 0.373 | 0.455 |
| LSA | 0.298 | 0.372 | 0.455 |
| L-WH DNN | 0.362 | 0.425 | 0.498 |

22.4.3.2. CNN-DSSM#

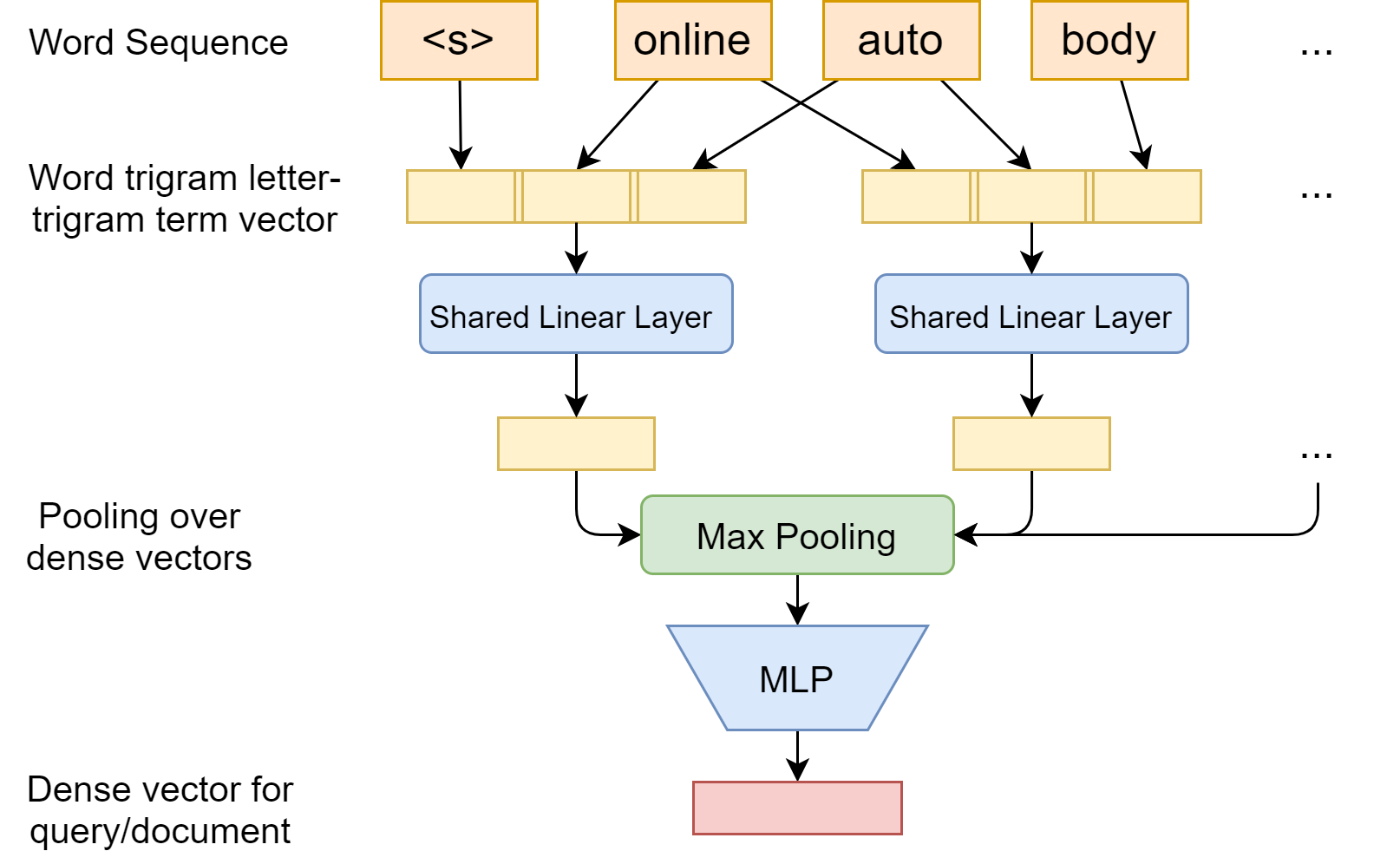

DSSM treats a query or a document as a bag of words, so the fine-grained contextual structures embedded in the word order are lost. CNN-DSSM [SHG+14] [Fig. 22.10] directly represents local contextual features at the word n-gram level; i.e., it projects each raw word n-gram to a low-dimensional feature vector such that semantically similar word n-grams are projected to vectors that are close to each other in this feature space.

Moreover, instead of simply summing all local word n-gram features evenly, CNN-DSSM performs a max pooling operation to select the highest neuron activation value across all word n-gram features at each dimension. This amounts to extracting the sentence-level salient semantic concepts.

Meanwhile, for any sequence of words, this operation forms a fixed-length sentence-level feature vector, with the same dimensionality as that of the local word n-gram features.

Given the letter-trigram based word representation, we represent a word n-gram by concatenating the letter-trigram vectors of each word, e.g., for the \(t\)-th word n-gram at the word n-gram layer, we have:

\[
l_{t}=\left[f_{t-d}^{\top}, \ldots, f_{t}^{\top}, \ldots, f_{t+d}^{\top}\right]^{\top},
\]

where \(f_{t}\) is the letter-trigram representation of the \(t\)-th word, and \(n=2 d+1\) is the size of the contextual window. In the original experiments, there are about \(30K\) unique letter-trigrams observed in the training set after the data are lower-cased and punctuation is removed. Therefore, the letter-trigram layer has a dimensionality of \(n \times 30 K\).
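The sliding-window concatenation and the max pooling step can be sketched as follows. The sketch assumes dense toy word vectors in place of the sparse letter-trigram vectors, zero padding at the sequence boundaries, and a `tanh` activation after the projection `W`; these choices, and the function names, are illustrative assumptions rather than details taken from the text above.

```python
import numpy as np


def word_ngram_windows(word_vecs, d=1):
    """Concatenate each word vector with its d left and d right neighbours
    (zero-padded at the boundaries); each row has n = 2d + 1 word vectors."""
    T, dim = word_vecs.shape
    pad = np.zeros((d, dim))
    padded = np.vstack([pad, word_vecs, pad])
    return np.stack([padded[t:t + 2 * d + 1].reshape(-1) for t in range(T)])


def conv_max_pool(word_vecs, W, d=1):
    """Project every word n-gram with shared weights W, then max-pool over
    positions to obtain a fixed-length sentence-level vector."""
    windows = word_ngram_windows(word_vecs, d)   # shape (T, n * dim)
    local = np.tanh(windows @ W)                 # shape (T, hidden)
    return local.max(axis=0)                     # shape (hidden,)
```

Whatever the number of words in the input, the pooled output has the dimensionality of the local feature vectors, which is what allows queries and documents of different lengths to be compared in the same space.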

Fig. 22.10 The architecture of CNN-DSSM. Each term together with its left and right contextual words are encoded together into term vectors.#

22.4.4. Mono-BERT And Duo-BERT#

22.4.4.1. Why Transformers?#

BERT (Bidirectional Encoder Representations from Transformers) [DCLT18] and its transformer variants [LWLQ21] represent the state-of-the-art modeling strategies in a broad range of natural language processing tasks. The application of BERT in information retrieval and ranking was pioneered by [NC19, NYCL19]. The fundamental characteristic of the BERT architecture is self-attention. After pretraining on large-scale text data, the BERT encoder can produce contextualized embeddings that better capture the semantics of different linguistic units. By adding an additional prediction head to the BERT backbone, such BERT encoders can be fine-tuned for retrieval-related tasks. In this section, we will go over the application of different BERT-based models in neural information retrieval and ranking tasks.

22.4.4.2. Mono-BERT (Cross-Encoder) For Point-wise Ranking#

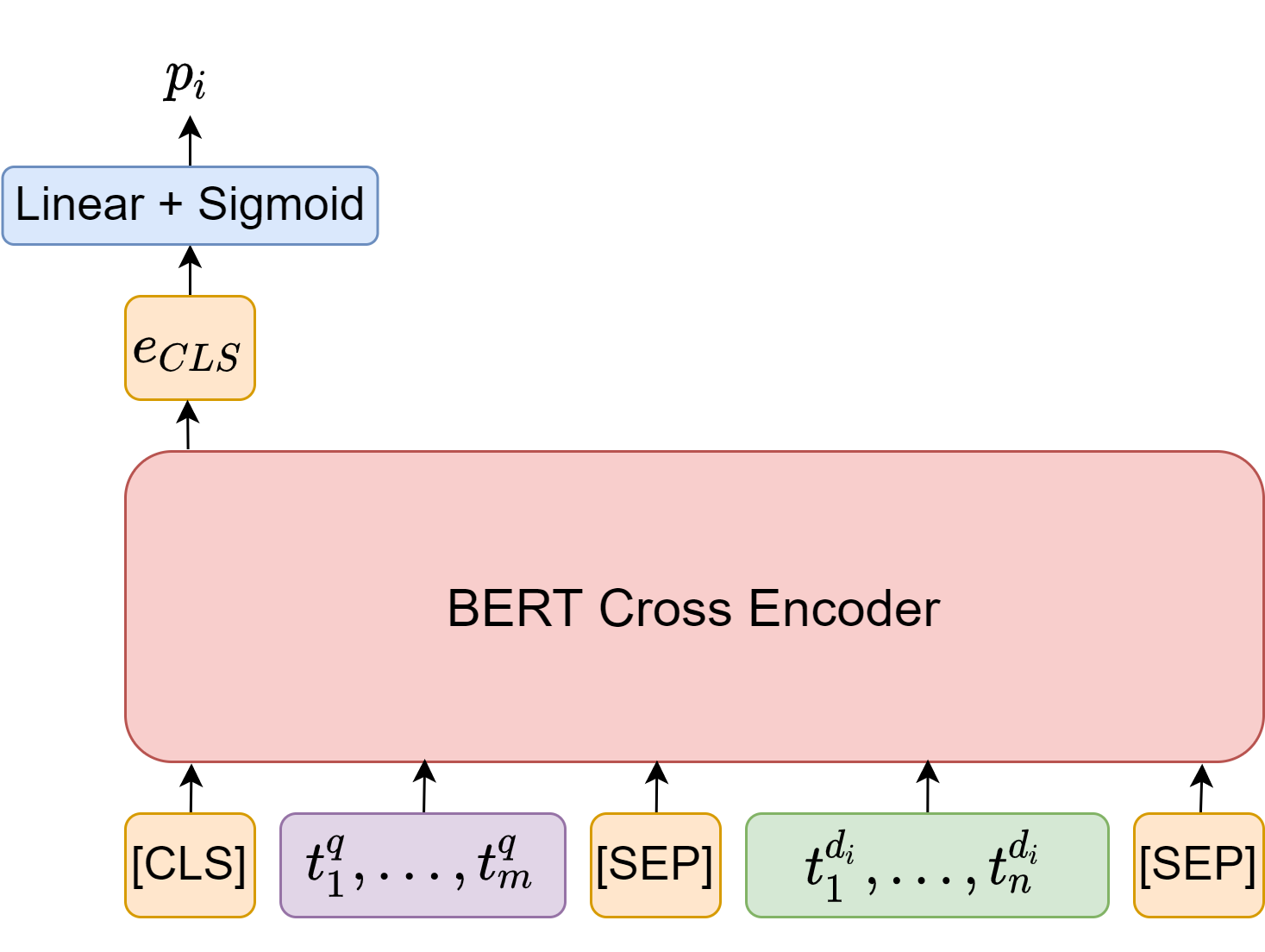

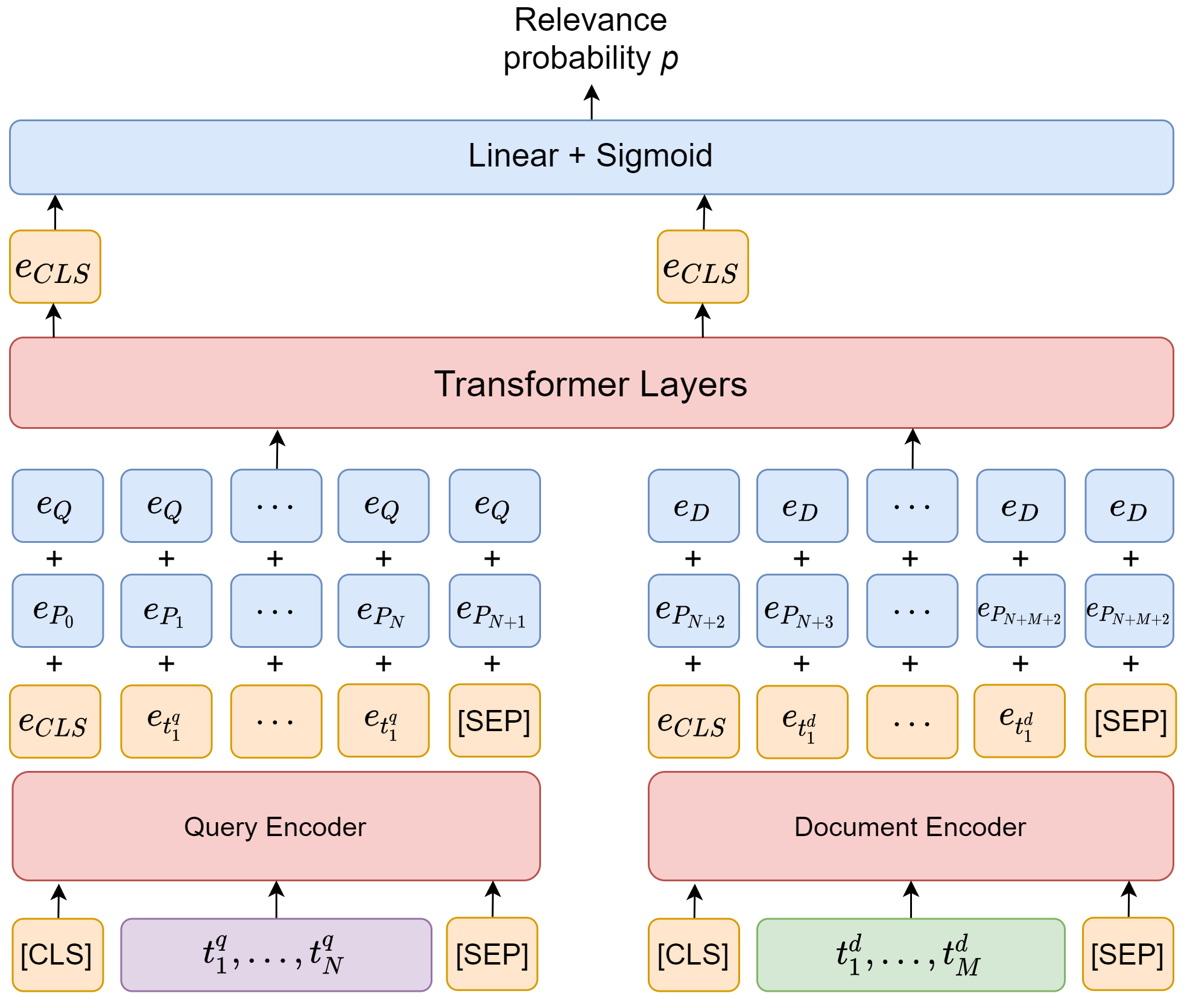

Fig. 22.11 The architecture of Mono-BERT for document relevance ranking. The input is the concatenation of the query token sequence and the candidate document token sequence. Once the input sequence is passed through the model, we use the [CLS] embedding as input to a single layer neural network to obtain a posterior probability \(p_{i}\) of the candidate \(d_{i}\) being relevant to query \(q\).#

The first application of BERT in document retrieval is using BERT as a cross encoder, where the query token sequence and the document token sequence are concatenated via [SEP] token and encoded together. This architecture [Fig. 22.11], called mono-BERT, was first proposed by [NC19, NYCL19].

To meet the token sequence length constraint of a BERT encoder (e.g., 512), we might need to truncate the query (e.g., to no more than 64 tokens) and the candidate document token sequence such that the total concatenated token sequence, including the special tokens, has a maximum length of 512 tokens.
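The truncation logic can be sketched as below. The example works on already-tokenized lists of strings and assumes the usual `[CLS] query [SEP] document [SEP]` layout, so three positions are reserved for special tokens; the function name and its parameters are hypothetical, and a real system would rely on the tokenizer of the chosen BERT implementation.

```python
def build_cross_encoder_input(query_tokens, doc_tokens, max_len=512, max_query_len=64):
    """Truncate the query first, then give the document the remaining budget."""
    q = query_tokens[:max_query_len]
    # Reserve three positions: one [CLS] and two [SEP] tokens.
    doc_budget = max(max_len - len(q) - 3, 0)
    d = doc_tokens[:doc_budget]
    return ["[CLS]"] + q + ["[SEP]"] + d + ["[SEP]"]
```

Truncating the query to a small fixed budget leaves most of the sequence length for the document, which is usually the longer of the two inputs.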

Once the input sequence is passed through the model, we use the [CLS] embedding as input to a single layer neural network to obtain a posterior probability \(p_{i}\) of the candidate \(d_{i}\) being relevant to query \(q\). The posterior probability can be used to rank documents.

The training data can be represented by a collection of triplets \((q, J_P^q, J_N^q), q\in Q\), where \(Q\) is the set of queries, \(J_{P}^q\) is the set of indexes of the relevant candidates associated with query \(q\) and \(J_{N}^q\) is the set of indexes of the nonrelevant candidates.

The encoder can be fine-tuned using cross-entropy loss:

\[
L_{\text{mono}}=-\sum_{q \in Q}\left[\sum_{i \in J_{P}^{q}} \log p_{i}+\sum_{i \in J_{N}^{q}} \log \left(1-p_{i}\right)\right].
\]

During training, each batch can consist of a query and its candidate documents (including both positives and negatives) produced by previous retrieval layers.

22.4.4.3. Duo-BERT For Pairwise Ranking#

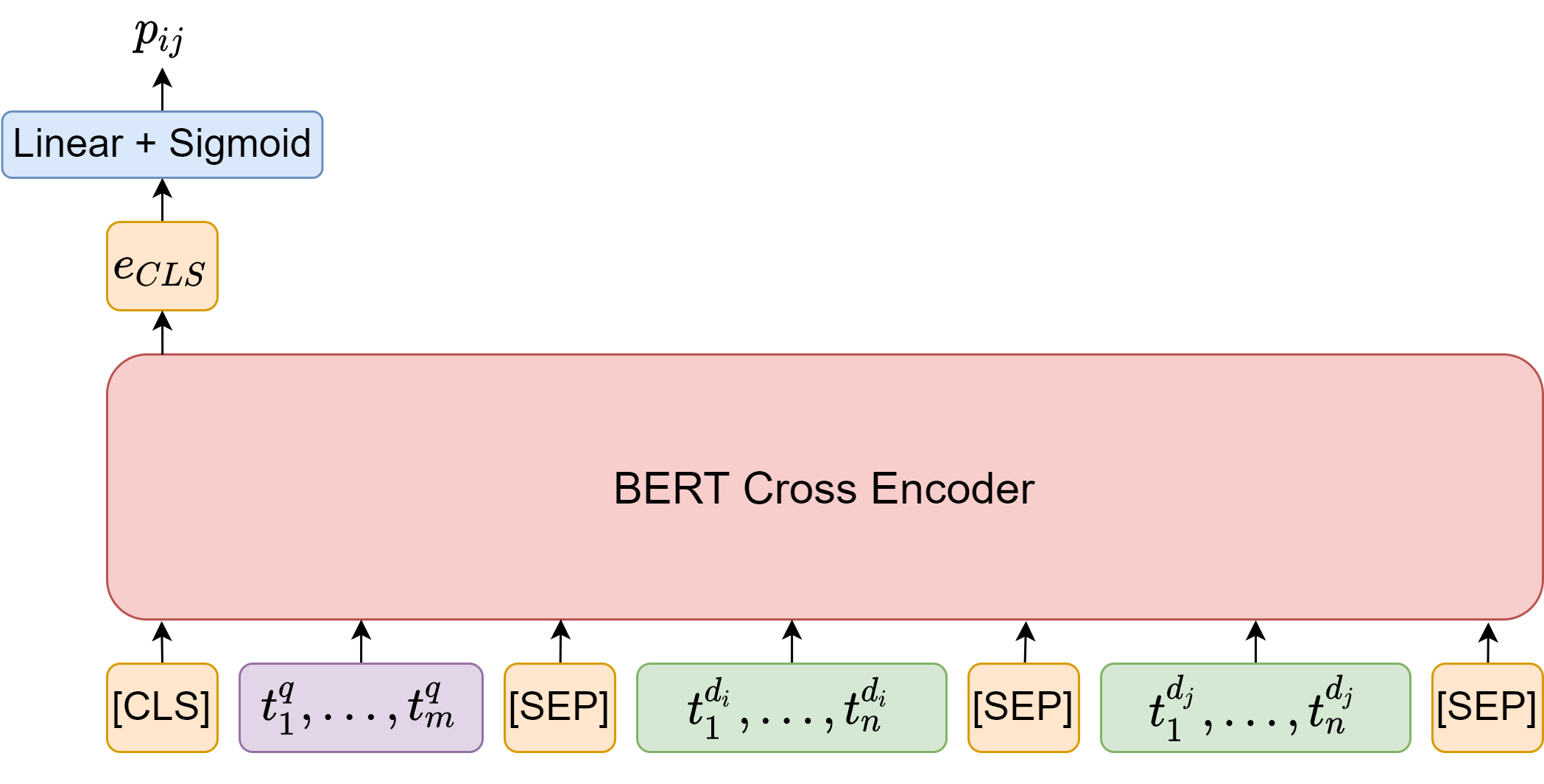

Mono-BERT can be characterized as a pointwise approach for ranking. Within the framework of learning to rank, [NC19, NYCL19] also proposed duo-BERT, which is a pairwise ranking approach. In this pairwise approach, the duo-BERT ranker model estimates the probability \(p_{i, j}\) of the candidate \(d_{i}\) being more relevant than \(d_{j}\) with respect to query \(q\).

The duo-BERT architecture [Fig. 22.12] takes the concatenation of the query \(q\), the candidate document \(d_{i}\), and the candidate document \(d_{j}\) as the input. We also need to truncate the query and the candidates \(d_{i}\) and \(d_{j}\) to proper lengths (e.g., 62, 223, and 223 tokens, respectively), so the entire sequence will have at most 512 tokens.

Once the input sequence is passed through the model, we use the [CLS] embedding as input to a single layer neural network to obtain a posterior probability \(p_{i,j}\). This posterior probability can be used to rank documents \(i\) and \(j\) with respect to each other. If there are \(k\) candidates for query \(q\), there will be \(k(k-1)\) passes to compute all the pairwise probabilities.

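The model can be fine-tuned with the following loss per query:

\[
L_{\text{duo}}=-\sum_{i \in J_{P}^{q},\, j \in J_{N}^{q}} \log p_{i, j}-\sum_{i \in J_{N}^{q},\, j \in J_{P}^{q}} \log \left(1-p_{i, j}\right).
\]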

Fig. 22.12 The duo-BERT architecture takes the concatenation of the query and two candidate documents as the input. Once the input sequence is passed through the model, we use the [CLS] embedding as input to a single layer neural network to obtain a posterior probability that the first document is more relevant than the second document.#

At inference time, the obtained \(k(k-1)\) pairwise probabilities are used to produce the final document relevance ranking given the query. The authors of [NYCL19] investigate five different aggregation methods (SUM, BINARY, MIN, MAX, and SAMPLE) to produce the final ranking score \(s_i\) of candidate \(d_i\):

\[
\begin{aligned}
\text{SUM}: \quad & s_{i}=\sum_{j \in J_{i}} p_{i, j} \\
\text{BINARY}: \quad & s_{i}=\sum_{j \in J_{i}} \mathbb{1}\left[p_{i, j}>0.5\right] \\
\text{MIN}: \quad & s_{i}=\min _{j \in J_{i}} p_{i, j} \\
\text{MAX}: \quad & s_{i}=\max _{j \in J_{i}} p_{i, j} \\
\text{SAMPLE}: \quad & s_{i}=\sum_{j \in J_{i}(m)} p_{i, j}
\end{aligned}
\]

where \(J_i = \{1 \le j \le k,\ j\neq i\}\) and \(J_i(m)\) is a set of \(m\) elements randomly sampled from \(J_i\).

The SUM method measures the pairwise agreement that candidate \(d_{i}\) is more relevant than the rest of the candidates \(\left\{d_{j}\right\}_{j \neq i}\). The BINARY method resembles majority vote. The MIN (MAX) method measures the relevance of \(d_{i}\) only against its strongest (weakest) competitor. The SAMPLE method aims to decrease the high inference costs of pairwise computations via sampling. Comparison studies using the MS MARCO dataset suggest that SUM and BINARY give the best results.
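The five aggregation methods can be sketched on top of a matrix of pairwise probabilities. The sketch assumes a \(k \times k\) array `P` where `P[i, j]` is the probability that candidate \(i\) is more relevant than candidate \(j\) (the diagonal is ignored); the function name, the `seed` argument, and the use of a 0.5 threshold for BINARY are assumptions made for illustration.

```python
import numpy as np


def aggregate_pairwise(P, method="SUM", m=None, seed=0):
    """Turn pairwise probabilities P[i, j] into one score per candidate."""
    P = np.asarray(P, dtype=float)
    k = P.shape[0]
    rng = np.random.default_rng(seed)
    scores = np.zeros(k)
    for i in range(k):
        others = np.array([j for j in range(k) if j != i])
        if method == "SAMPLE":
            if m is None:
                raise ValueError("SAMPLE requires the sample size m")
            others = rng.choice(others, size=min(m, len(others)), replace=False)
        row = P[i, others]
        if method in ("SUM", "SAMPLE"):
            scores[i] = row.sum()
        elif method == "BINARY":
            scores[i] = (row > 0.5).sum()     # number of pairwise "wins"
        elif method == "MIN":
            scores[i] = row.min()             # against the strongest competitor
        elif method == "MAX":
            scores[i] = row.max()             # against the weakest competitor
        else:
            raise ValueError(f"unknown method: {method}")
    return scores
```

Sorting the candidates by the returned scores in descending order gives the final ranking. With SAMPLE, only \(m\) comparisons per candidate are used, so in a real system only those pairs would need to be scored by the model.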

22.4.4.4. Multistage Retrieval And Ranking Pipeline#

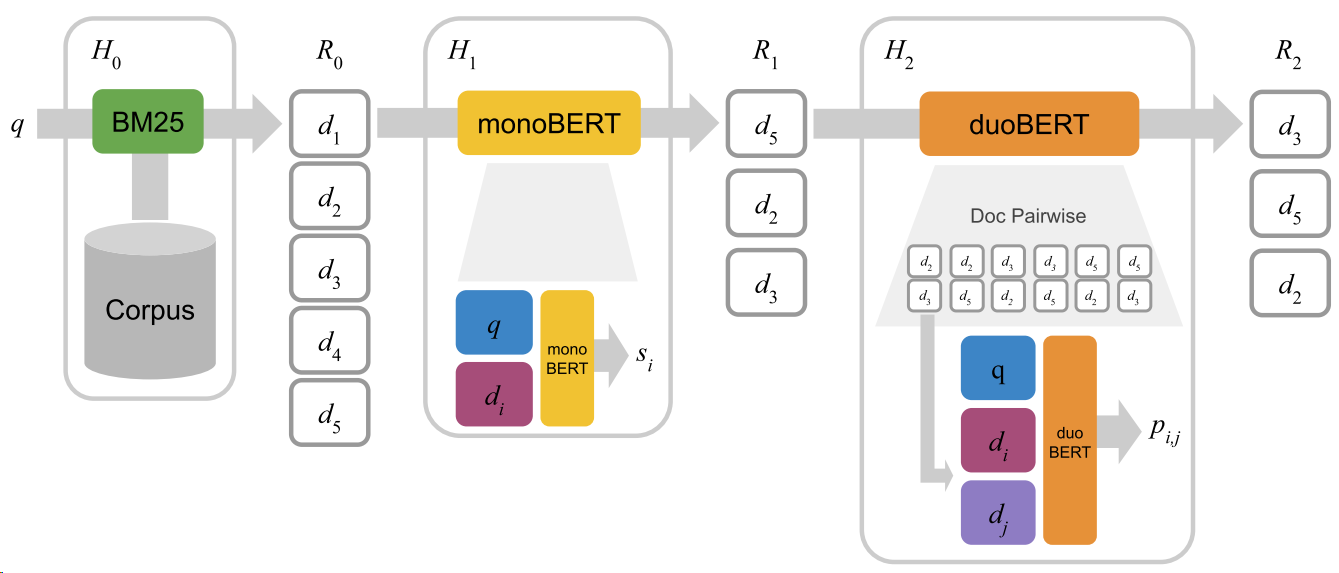

Fig. 22.13 Illustration of a three-stage retrieval-ranking architecture using BM25, monoBERT and duoBERT. Image from [NYCL19].#

With BERT variants of different ranking capability, we can construct a multi-stage ranking architecture to select a handful of the most relevant documents from a large collection of candidate documents given a query. Consider a typical architecture comprising a number of stages from \(H_0\) to \(H_N\). \(H_0\) is an exact-term matching stage using an inverted index. The \(H_0\) stage takes billion-scale documents as input and outputs thousands of candidates \(R_0\). For stages from \(H_1\) to \(H_N\), each stage \(H_{n}\) receives a ranked list \(R_{n-1}\) of candidates from the previous stage and outputs a candidate list \(R_n\). Typically \(|R_n| \ll |R_{n-1}|\) to enable efficient retrieval.

An example three-stage retrieval-ranking system is shown in Fig. 22.13. In the first stage \(H_{0}\), given a query \(q\), the top candidate documents \(R_{0}\) are retrieved using BM25. In the second stage \(H_{1}\), monoBERT produces a relevance score \(s_{i}\) for each pair of query \(q\) and candidate \(d_{i} \in R_{0}.\) The top candidates with respect to these relevance scores are passed to the last stage \(H_{2}\), in which duoBERT computes a relevance score \(p_{i, j}\) for each triple \(\left(q, d_{i}, d_{j}\right)\). The final list of candidates \(R_{2}\) is formed by re-ranking the candidates according to these scores.
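The cascade structure itself is simple to express in code. The sketch below chains pointwise scoring stages, each keeping a shrinking number of candidates. The two toy scorers are hypothetical stand-ins for a cheap lexical stage and a more expensive re-ranker; they are not BM25 or BERT, and a pairwise stage such as duoBERT would additionally need its pairwise probabilities aggregated into per-document scores before it fits this interface.

```python
def multistage_rank(query, corpus, stages):
    """stages is a list of (score_fn, keep) pairs; each stage re-scores the
    survivors of the previous stage and keeps its top `keep` candidates."""
    candidates = list(corpus)
    for score_fn, keep in stages:
        ranked = sorted(candidates, key=lambda d: score_fn(query, d), reverse=True)
        candidates = ranked[:keep]
    return candidates


def term_overlap(query, doc):
    """Toy first-stage scorer: number of shared terms."""
    return len(set(query.split()) & set(doc.split()))


def overlap_ratio(query, doc):
    """Toy second-stage scorer: shared terms relative to document vocabulary."""
    return term_overlap(query, doc) / max(len(set(doc.split())), 1)
```

For instance, `multistage_rank(q, corpus, [(term_overlap, 1000), (overlap_ratio, 10)])` runs the cheap scorer over the whole corpus and the costlier scorer only on the 1000 survivors, mirroring the requirement that each stage sees far fewer candidates than the one before.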

Evaluation. Different multistage architecture configurations are evaluated using the MS MARCO dataset. We have following observations:

Using a single stage of BM25 yields the worst performance.

Adding a monoBERT stage significantly improves the performance over the single-stage BM25 architecture.

Adding duoBERT as a third stage yields only a small additional gain.

Further, the authors found that employing the technique of Target Corpus Pre-training (TCP) gives an additional performance gain. Specifically, the BERT backbone undergoes a two-phase pre-training. In the first phase, the model is pre-trained using the original setup, that is, Wikipedia (2.5B words) and the Toronto Book corpus (0.8B words) for one million iterations. In the second phase, the model is further pre-trained on the MS MARCO corpus.

| Method | Dev | Eval |
|---|---|---|
| Anserini (BM25) | 18.7 | 19.0 |
| + monoBERT | 37.2 | 36.5 |
| + monoBERT + duoBERT (MAX) | 32.6 | - |
| + monoBERT + duoBERT (MIN) | 37.9 | - |
| + monoBERT + duoBERT (SUM) | 38.2 | 37.0 |
| + monoBERT + duoBERT (BINARY) | 38.3 | - |
| + monoBERT + duoBERT (SUM) + TCP | 39.0 | 37.9 |

22.4.5. Advanced Architectures#

22.4.5.1. DC-BERT#

One way to improve the computational efficiency of a cross-encoder is to employ bi-encoders for separate partial encoding and then employ an additional shallow module for cross encoding. One example is the architecture shown in Fig. 22.14, which is called DC-BERT and was proposed in [NZG+20]. The overall architecture of DC-BERT consists of a dual-BERT component for decoupled encoding, a Transformer component for question-document interactions, and a binary classifier component for document relevance scoring.

The document encoder can be run offline to pre-encode all documents and cache all term representations. During online inference, we only need to run the BERT query encoder. The obtained contextual term representations are then fed into the high-layer Transformer interaction layers.

Fig. 22.14 The overall architecture of DC-BERT consists of a dual-BERT component for decoupled encoding, a Transformer component for question-document interactions, and a classifier component for document relevance scoring.#

Dual-BERT component. DC-BERT contains two pre-trained BERT models to independently encode the question and each retrieved document. During training, the parameters of both BERT models are fine-tuned to optimize the learning objective.

Transformer component. The dual-BERT components produce contextualized embeddings for both the query token sequence and the document token sequence. Then we add global position embeddings \(\mathbf{E}_{P_{i}} \in \mathbb{R}^{d}\) and segment embedding again to re-encode the position information and segment information (i.e., query vs document). Both the global position and segment embeddings are initialized from pre-trained BERT, and will be fine-tuned. The number of Transformer layers \(K\) is configurable to trade-off between the model capacity and efficiency. The Transformer layers are initialized by the last \(K\) layers of pre-trained BERT, and are fine-tuned during the training.

Classifier component. The two [CLS] token outputs from the Transformer layers are fed into a binary classifier to predict whether the retrieved document is relevant to the query. Following previous work (Das et al., 2019; Htut et al., 2018; Lin et al., 2018), DC-BERT employs paragraph-level distant supervision to gather labels for training the classifier, where a paragraph that contains the exact ground truth answer span is labeled as a positive example. The binary classifier is parameterized as an MLP on top of the Transformer layers:

\[
p\left(q_{i}, d_{j}\right)=\sigma\left(\operatorname{Linear}\left(\operatorname{ReLU}\left(\operatorname{Linear}\left(\left[o_{[C L S]} ; o_{[C L S]}^{\prime}\right]\right)\right)\right)\right),
\]

where \(\left(q_{i}, d_{j}\right)\) is a pair of question and retrieved document, and \(o_{[C L S]}\) and \(o_{[C L S]}^{\prime}\) are the Transformer output encodings of the [CLS] token of the question and the document, respectively. The MLP parameters are updated by minimizing the cross-entropy loss.

Evaluation. In the experiments, DC-BERT uses one Transformer layer for question-document interactions and is compared with two efficiency-oriented baselines: Quantized BERT, an 8-bit integer model, and DistilBERT, a compact BERT model with 2 Transformer layers.

Consider the retriever speed first. DC-BERT achieves over 10x speedup over the BERT-base retriever, which demonstrates the efficiency of this method. Quantized BERT has the same model architecture as BERT-base, leading to minimal speedup. DistilBERT achieves about 6x speedup with only 2 Transformer layers, while BERT-base uses 12 Transformer layers.

With a 10x speedup, DC-BERT still achieves retrieval performance similar to BERT-base on both datasets. At the cost of little speedup, Quantized BERT also works well in ranking documents. DistilBERT performs significantly worse than BERT-base, which shows the limitation of the distilled BERT model.

| Model | SQuAD | | Natural Questions | |
|---|---|---|---|---|
| | PTB@10 | Speedup | P@10 | Speedup |
| BERT-base | 71.5 | 1.0x | 65.0 | 1.0x |
| Quantized BERT | 68.0 | 1.1x | 64.3 | 1.1x |
| DistilBERT | 56.4 | 5.7x | 60.6 | 5.7x |
| DC-BERT | 70.1 | 10.3x | 63.5 | 10.3x |

To further investigate the impact of the model architecture design, DC-BERT is compared with two variants: 1) DC-BERT-Linear, which uses linear layers instead of Transformers for interaction; and 2) DC-BERT-LSTM, which uses LSTM and bi-linear layers for interactions following previous work (Min et al., 2018). The results are reported in the table below. Due to the simplistic architecture of its interaction layers, DC-BERT-Linear achieves the best speedup but suffers a significant performance drop, while DC-BERT-LSTM achieves slightly worse performance and speedup than DC-BERT.

| Retriever Model | Retriever P@10 | Retriever Speedup |
|---|---|---|
| DC-BERT-Linear | 57.3 | 43.6x |
| DC-BERT-LSTM | 61.5 | 8.2x |
| DC-BERT | 63.5 | 10.3x |

22.4.5.2. ColBERT#

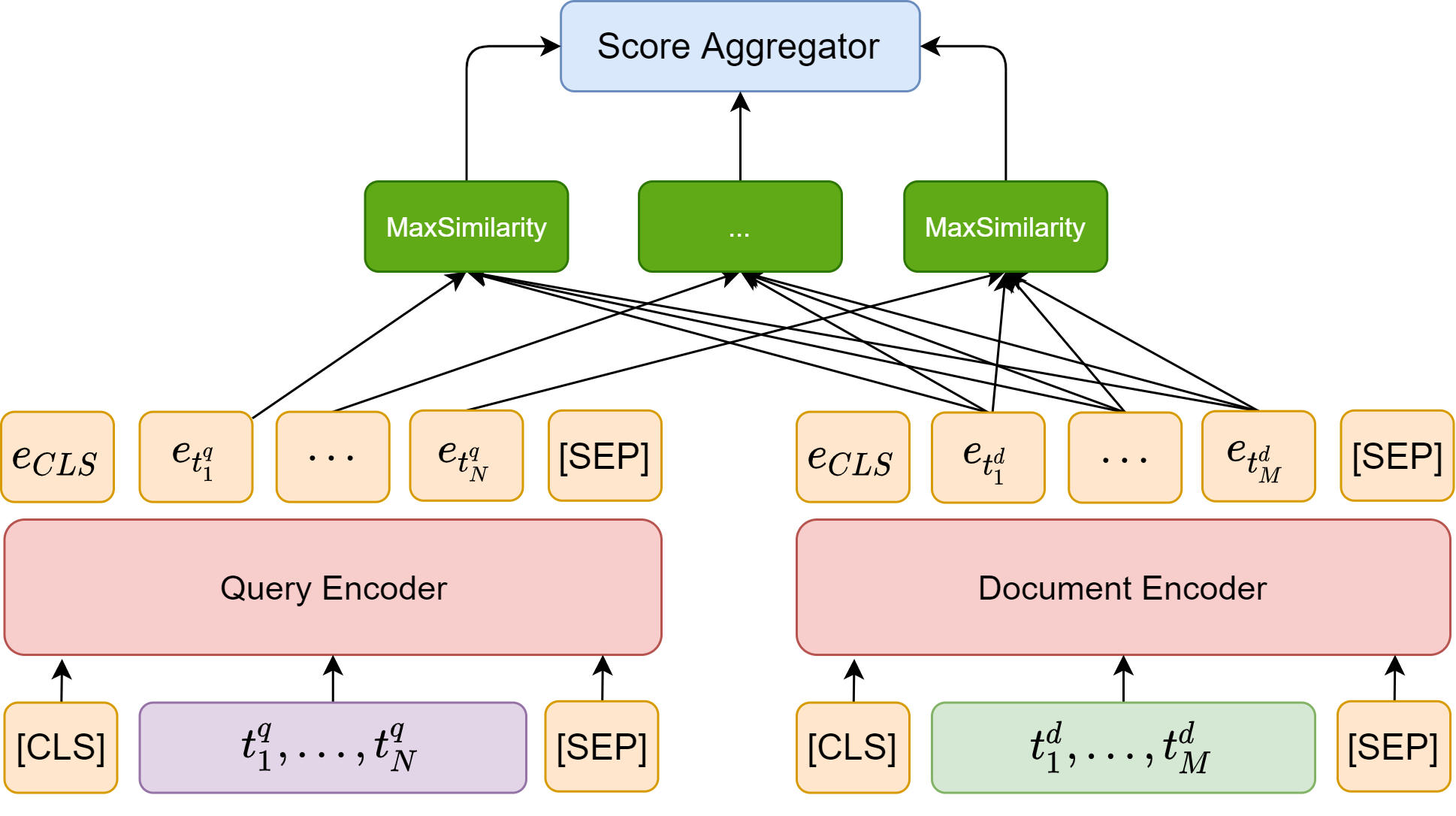

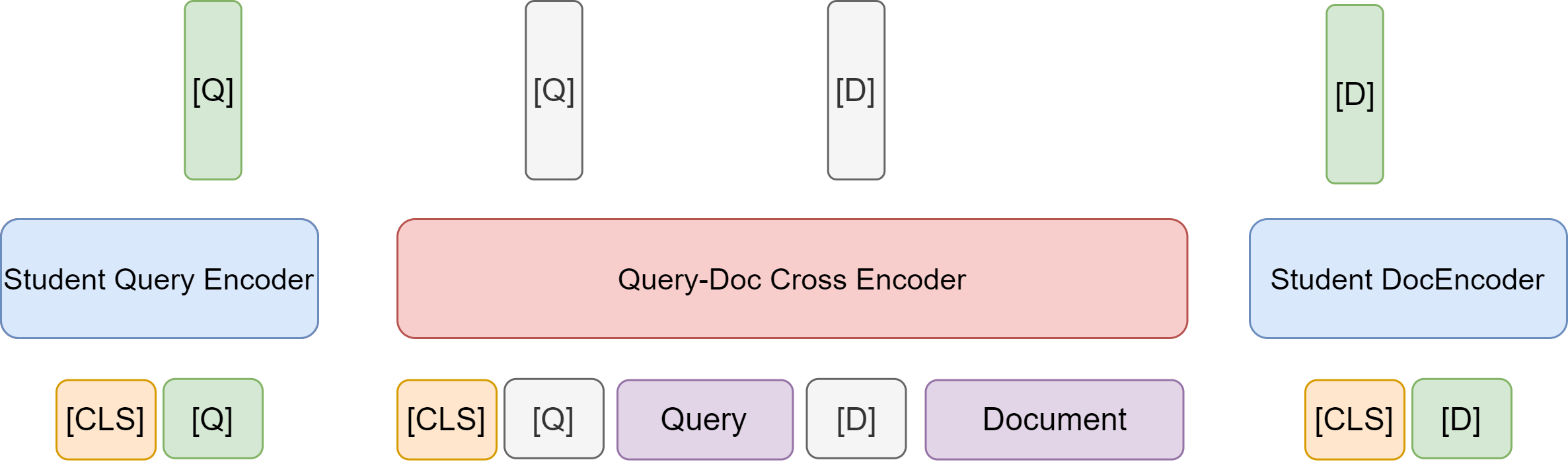

ColBERT [KZ20] is another example architecture that consists of an early separate encoding phase and a late interaction phase, as shown in Fig. 22.15. ColBERT employs a single BERT model for both the query and the document encoders, but distinguishes input sequences that correspond to queries and documents by prepending a special token [Q] to queries and another token [D] to documents.

Fig. 22.15 The architecture of ColBERT, which consists of an early separate encoding phase and a late interaction phase.#

The query encoder takes query tokens as the input. Note that if a query is shorter than a pre-defined number \(N_q\), it will be padded with BERT’s special [mask] tokens up to length \(N_q\); otherwise, only the first \(N_q\) tokens will be kept. It is found that the mask token padding serves as a form of query augmentation and brings a performance gain. In addition, a [Q] token is placed right after BERT’s sequence start token [CLS]. The query encoder then computes a contextualized representation for the query tokens.

The document encoder has a very similar architecture. A [D] token is placed right after BERT’s sequence start token [CLS]. Note that after passing through the encoder, embeddings corresponding to punctuation symbols are filtered out.

Given BERT’s representation of each token, an additional linear layer with no activation is used to reduce the dimensionality. The reduced dimensionality \(m\) is set much smaller than BERT’s fixed hidden dimension.

Finally, given \(q= q_{1} \ldots q_{l}\) and \(d=d_{1} \ldots d_{n}\), an additional CNN layer is used to allow each embedding vector to interact with its neighbor, yielding the bags of embeddings \(E_{q}\) and \(E_{d}\) in the following manner.

\[
\begin{aligned}
E_{q} &:=\operatorname{Normalize}\left(\operatorname{CNN}\left(\operatorname{BERT}\left(\text{[Q] } q_{1} \ldots q_{l} \text{ \# \# } \ldots \text{ \#}\right)\right)\right) \\
E_{d} &:=\operatorname{Filter}\left(\operatorname{Normalize}\left(\operatorname{CNN}\left(\operatorname{BERT}\left(\text{[D] } d_{1} \ldots d_{n}\right)\right)\right)\right)
\end{aligned}
\]

Here # refers to the [mask] tokens, \(\operatorname{Normalize}\) denotes \(L_2\) length normalization, and \(\operatorname{Filter}\) removes the embeddings of punctuation symbols.

In the late interaction phase, every query embedding interacts with all document embeddings via a MaxSimilarity operator, which computes maximum similarity (e.g., cosine similarity), and the scalar outputs of these operators are summed across query terms.

Formally, the final similarity score between \(q\) and \(d\) is given by

\[
S_{q, d}=\sum_{i \in I_{q}} \max _{j \in I_{d}} E_{q_{i}} \cdot E_{d_{j}}^{\top},
\]

where \(I_q = \{1,...,l\}\), \(I_d = \{1, ..., n\}\) are the index sets for query token embeddings and document token embeddings, respectively. ColBERT is differentiable end-to-end and we can fine-tune the BERT encoders and train from scratch the additional parameters (i.e., the linear layer and the \([Q]\) and \([D]\) markers’ embeddings). Notice that the final aggregation interaction mechanism has no trainable parameters.
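The late interaction step is compact enough to write out directly. The sketch below assumes the query and document token embeddings are already available as row-wise \(L_2\)-normalized matrices, so that inner products equal cosine similarities; the function names are hypothetical.

```python
import numpy as np


def l2_normalize(x):
    """Normalize each row (token embedding) to unit L2 length."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def maxsim_score(E_q, E_d):
    """Late interaction: for every query token take the maximum similarity
    over all document tokens, then sum over query tokens."""
    sim = E_q @ E_d.T                 # shape (l, n): all token-pair similarities
    return float(sim.max(axis=1).sum())
```

Since the operator consists only of a matrix product, a row-wise maximum, and a sum, it adds no trainable parameters, and document embeddings can be computed and stored offline.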

The retrieval performance of ColBERT is evaluated on the MS MARCO dataset. Compared with traditional exact-term matching retrieval, ColBERT has a considerably higher latency (458 ms versus 62 ms for BM25 with Anserini), but its MRR@10 is significantly better.

Method |

MRR@10(Dev) |

MRR@10 (Local Eval) |

Latency (ms) |

Recall@50 |

|---|---|---|---|---|

BM25 (official) |

16.7 |

- |

- |

- |

BM25 (Anserini) |

18.7 |

19.5 |

62 |

59.2 |

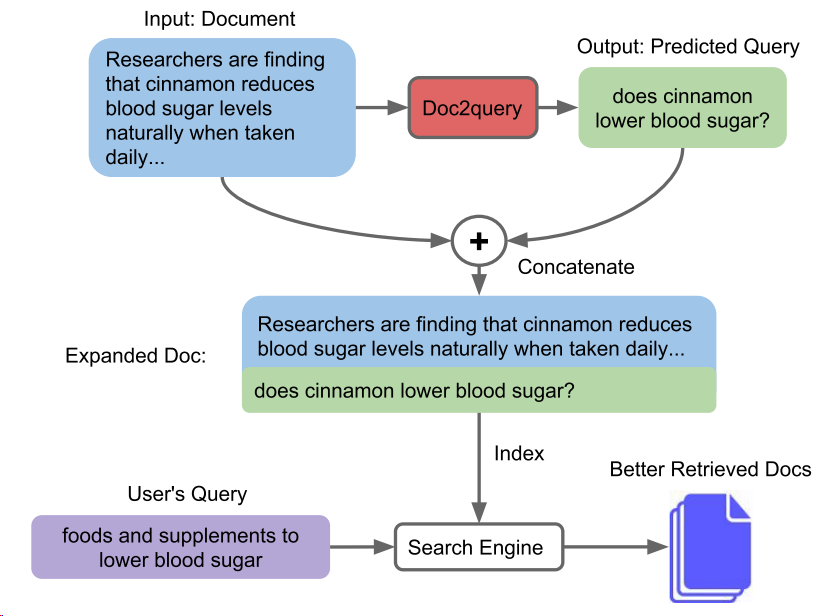

doc2query |

21.5 |

22.8 |

85 |

64.4 |

DeepCT |

24.3 |

- |

62 (est.) |

69[2] |

docTTTTTquery |

27.7 |

28.4 |

87 |

75.6 |

ColBERT L2 (re-rank) |

34.8 |

36.4 |

- |

75.3 |

ColBERTL2 (end-to-end) |

36.0 |

36.7 |

458 |

82.9 |

Similarly, we can evaluate ColBERT’s re-ranking performance against some strong baselines, such as BERT cross encoders [NC19, NYCL19]. ColBERT has demonstrated significant benefits in reducing latency with little cost of re-ranking performance.

| Method | MRR@10 (Dev) | MRR@10 (Eval) | Re-ranking Latency (ms) |
|---|---|---|---|
| BM25 (official) | 16.7 | 16.5 | - |
| KNRM | 19.8 | 19.8 | 3 |
| Duet | 24.3 | 24.5 | 22 |
| fastText+ConvKNRM | 29.0 | 27.7 | 28 |
| BERT base | 34.7 | - | 10,700 |
| BERT large | 36.5 | 35.9 | 32,900 |
| ColBERT (over BERT base) | 34.9 | 34.9 | 61 |

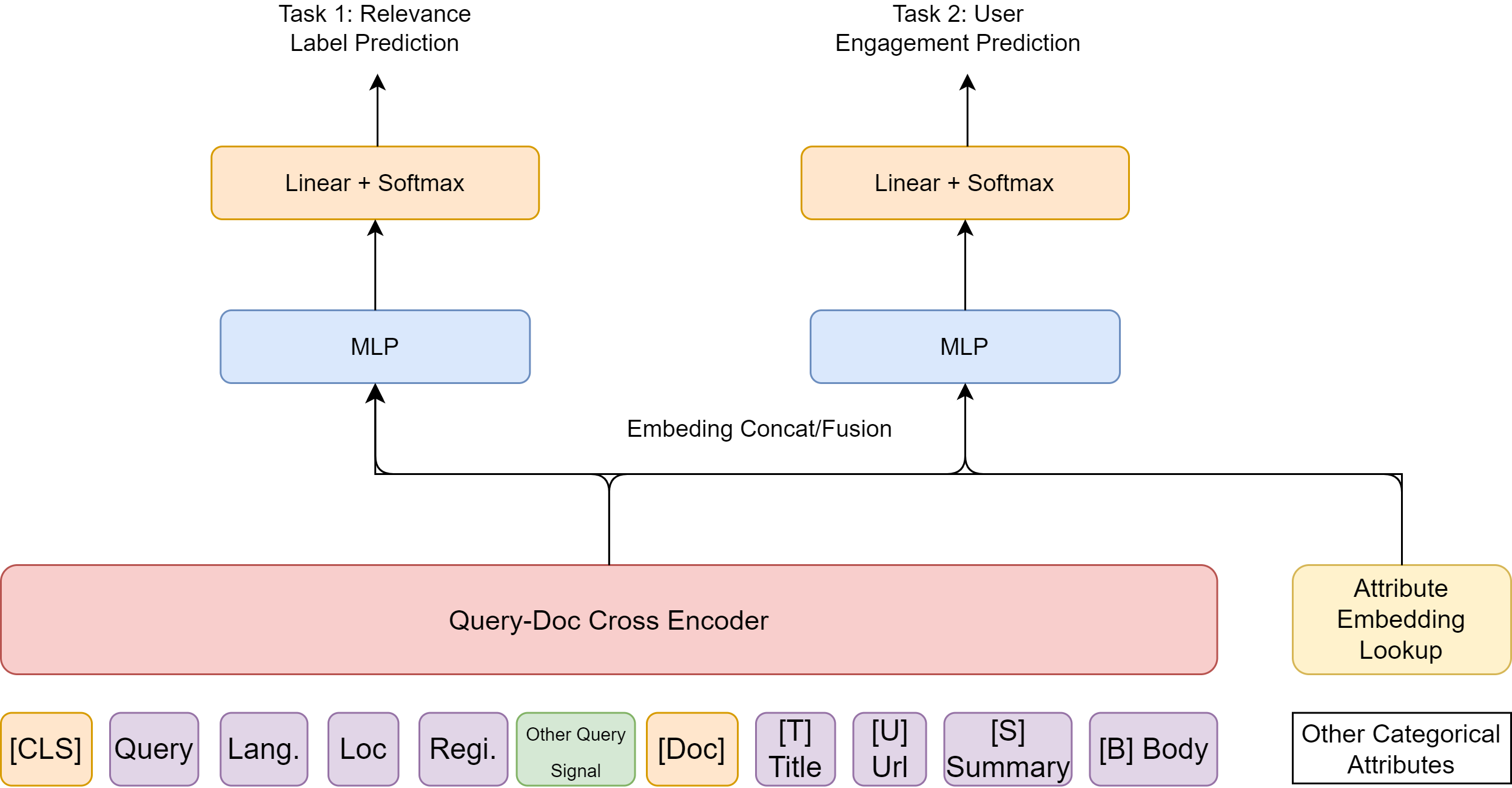

22.4.5.3. Multi-Attribute and Multi-task Modeling#

We can extend the cross-encoder to take into account multiple attributes from the query side and the document side, as well as to generate multiple predictive outputs for different tasks [Fig. 22.16].

For example, query side attributes could include

Query text

Query’s language (produced by a cheaper language detection model)

User’s location and region

Other query side signals (e.g., key concept groups in the query, document signals from historical queries)

Document side attributes could include

Organic contents with semantic markers (e.g., [T] for Title)

Other derived signals from documents (e.g., pseudo queries, historical queries, etc.)

Other high level signals suitable for late stage fusion include

Document freshness attribute (for intent to search latest news)

Document spamness attributes

After feature fusion (e.g., via concatenation), we can use separate MLP heads for different tasks.

Fig. 22.16 A representative cross-encoder that is extended to take into account multiple attributes from the query side and the document side. There are multiple outputs for multi-tasking.#

22.5. Ranker Training#

22.5.1. Overview#

Unlike in classification or regression, the main goal of a ranker [AWB+19] is not to assign a label or a value to individual items, but to produce an ordering of the items in a list in such a way that the utility of the entire list is maximized.

In other words, in ranking we are more concerned with the relative ordering of items than with predicting the numerical value or label of an individual item.

Pointwise ranking transforms the ranking problem into a regression problem. Given a certain Query, ranking amounts to

Predict the relevance score between the document to the query

Order the document list based on its relevance score with the query.

Pairwise ranking [Bur10], instead of predicting the absolute relevance, learns to predict the relative order of documents. This method is particularly useful in scenarios where the absolute relevance scores are less important than the relative ordering of items.

Listwise ranking [CQL+07] considers the entire list of items simultaneously when training a ranking model. Unlike pairwise or pointwise methods, listwise ranking optimizes objectives defined over the whole list, often designed to track ranking metrics such as NDCG or MAP, which better aligns the training objective with the evaluation criteria used in information retrieval tasks. This approach can capture more complex relationships between items in a list and often leads to better performance in real-world ranking scenarios, though it may be computationally more expensive than other ranking methods.

22.5.2. Training Data#

In a typical model learning setting, we construct training data from user search log, which contains queries issued by users and the documents they clicked after issuing the query. The basic assumption is that a query and a document are relevant if the user clicked the document.

Model learning in information retrieval typically falls into the category of contrastive learning. The query and the clicked document form a positive example; the query and irrelevant documents form negative examples. For retrieval problems, it is often the case that positive examples are available explicitly, while negative examples are unknown and need to be selected from an extremely large pool. The strategy of selecting negative examples plays an important role in determining quality of the encoders. In the most simple case, we randomly select unclicked documents as irrelevant document, or negative example. We defer the discussion of advanced negative example selecting strategy to Section 22.6.

When there is a shortage of annotation data or click behavior data, we can also leverage weakly supervised data for training [DZS+17, HG19, NSN18, RSL+21]. In the weakly supervised data, labels or signals are obtained from an unsupervised ranking model, such as BM25. For example, given a query, relevance scores for all documents can be computed efficiently using BM25. Documents with highest scores can be used as positive examples and documents with lower scores can be used as negatives or hard negatives.

22.5.3. Model Training Objective Functions#

22.5.3.1. Pointwise Regression Objective#

The idea of pointwise regression objective is to model the numerical relevance score for a given query-document pair. At inference time, the relevance scores between a set of candidates and a given query can be predicted and used for ranking.

During training, we are given a set of query-document pairs \(\left(q_{i}, d_{i, j}\right)\), their corresponding relevance scores \(y_{i, j} \in [0, 1]\), and the model predictions \(f(q_i,d_{i,j})\). A pointwise regression objective optimizes the model to predict the relevance score by minimizing a regression loss such as the squared error

\[
L=\sum_{i, j}\left(f\left(q_{i}, d_{i, j}\right)-y_{i, j}\right)^{2}.
\]

Using a regression objective offers the flexibility to model different levels of relevance between queries and documents. However, such flexibility also comes with a requirement that the target relevance score should be accurate on an absolute scale. While human-annotated data might provide absolute relevance scores, human annotation is expensive and small in scale. On the other hand, absolute relevance scores that are approximated from click data can be noisy and less suitable for a regression objective. To make training robust to label noise, one can consider pairwise ranking objectives. This is particularly important in weak supervision scenarios with noisy labels. Using a ranking objective alleviates this issue by forcing the model to learn a preference function rather than reproduce absolute scores.

22.5.3.2. Pointwise Ranking Objective#

The idea of pointwise ranking objective is to simplify a ranking problem to a binary classification problem. Specifically, given a set of query-document pairs \(\left(q_{i}, d_{i, j}\right)\) and their corresponding relevance label \(y_{i, j} \in \{0, 1\}\), where 0 denotes irrelevant and 1 denotes relevant. A pointwise learning objective tries to optimize a model to predict the relevance label.

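A commonly used pointwise loss function is the binary cross-entropy loss:

\[L=-\sum_{i} \sum_{j}\left[y_{i, j} \log p\left(q_{i}, d_{i, j}\right)+\left(1-y_{i, j}\right) \log \left(1-p\left(q_{i}, d_{i, j}\right)\right)\right],\]

where \(p\left(q_{i}, d_{i, j}\right)\) is the predicted probability of document \(d_{i,j}\) being relevant to query \(q_i\).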
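
A minimal sketch of the binary cross-entropy loss for pointwise ranking is given below. The clipping constant `eps` is an assumption added for numerical safety, and the probabilities are toy values rather than model outputs.

```python
import math


def binary_cross_entropy(probs, labels, eps=1e-12):
    """Summed binary cross-entropy over query-document pairs."""
    total = 0.0
    for p, y in zip(probs, labels):
        # Clip the probability to avoid log(0).
        p = min(max(p, eps), 1.0 - eps)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total


# One relevant pair predicted at 0.9 and one irrelevant pair predicted at 0.1.
loss = binary_cross_entropy([0.9, 0.1], [1, 0])
```

Each pair contributes independently to the sum, which is what makes the objective easy to scale and also why it carries no information about document order.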

The advantages of pointwise ranking objectives are two-fold. First, pointwise ranking objectives are computed on each query-document pair \(\left(q_{i}, d_{i, j}\right)\) separately, which makes them simple and easy to scale. Second, the outputs of neural models learned with pointwise loss functions often have real meaning and value in practice. For instance, in sponsored search, the predicted relevance probability can be used in ad bidding, which is more important than creating a good result list in some application scenarios.

In general, however, pointwise ranking objectives are considered to be less effective in ranking tasks. Because pointwise loss functions consider no document preference or order information, they are not guaranteed to produce the best ranking list even when the model loss reaches the global minimum. Therefore, better ranking paradigms directly optimize document ranking using pairwise or even listwise loss functions.

22.5.3.3. Pairwise Ranking via Triplet Loss#

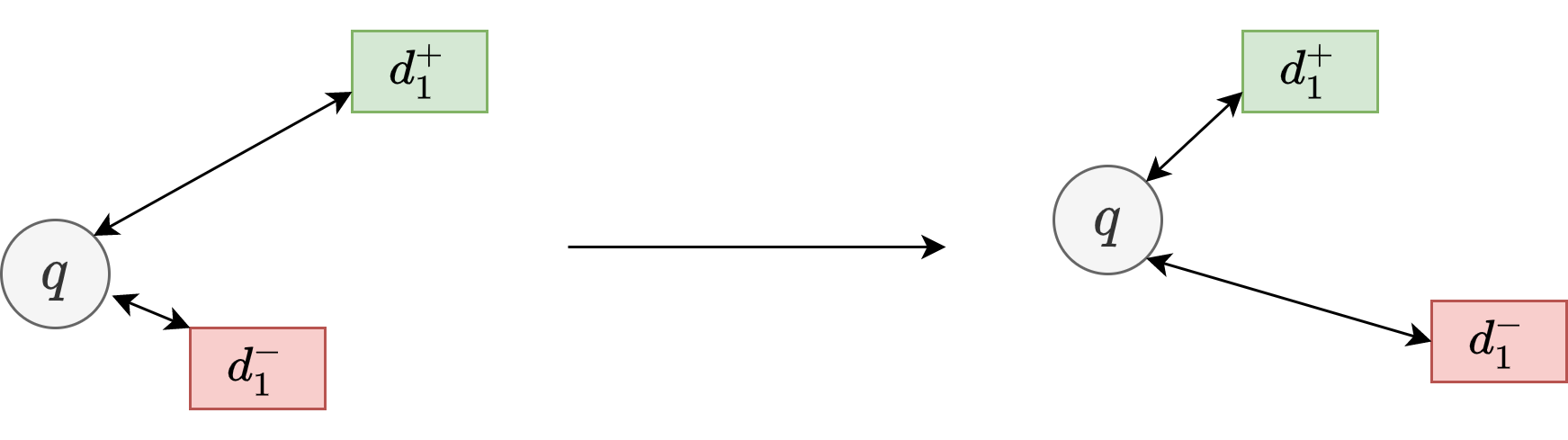

Pointwise ranking loss aims to optimize the model to directly predict the relevance between a query and documents on an absolute scale. From the embedding optimization perspective, it trains the neural query/document encoders to produce similar embedding vectors for a query and its relevant documents and dissimilar embedding vectors for a query and its irrelevant documents.

On the other hand, pairwise ranking objectives focus on optimizing the relative preferences between documents rather than predicting their relevance labels. In contrast to pointwise methods, where the final ranking loss is the sum of the losses on each document, pairwise loss functions are computed over different combinations of document pairs.

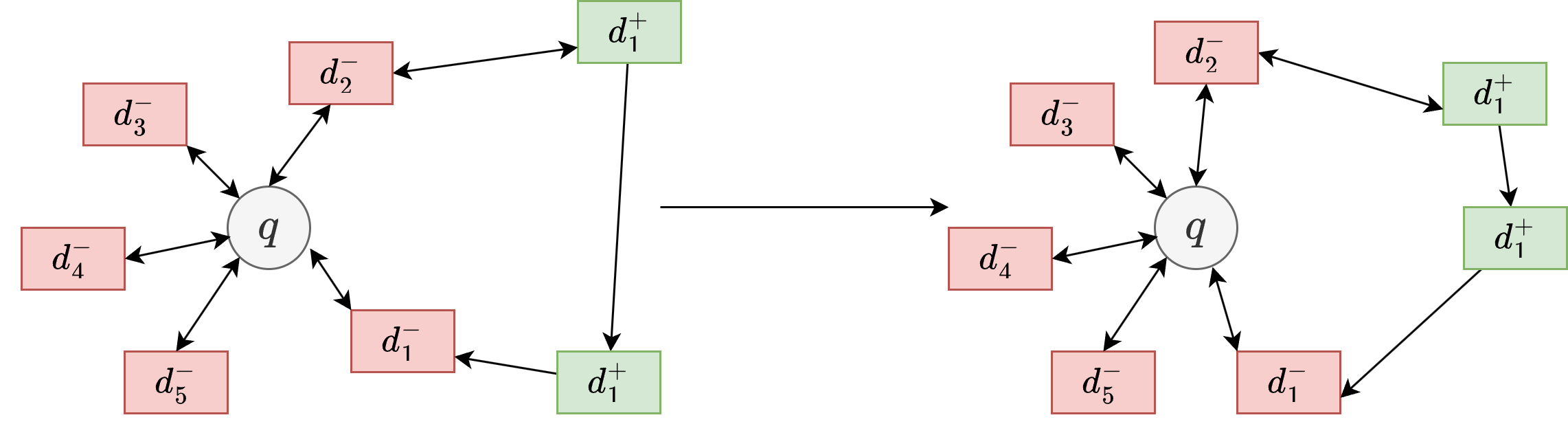

One of the most common pairwise ranking loss functions is the triplet loss. Let \(\mathcal{D}=\left\{\left\langle q_{i}, d_{i}^{+}, d_{i}^{-}\right\rangle\right\}_{i=1}^{m}\) be the training data organized into \(m\) triplets. Each triplet contains one query \(q_{i}\) and one relevant document \(d_{i}^{+}\), along with one irrelevant (negative) document \(d_{i}^{-}\). Negative documents are typically randomly sampled from a large corpus or strategically constructed [Section 22.6]. The learning process in the embedding space is visualized in Fig. 22.17. Triplet loss guides the encoder networks to pull a query and its relevant document closer together and to push a query and its irrelevant document apart.

The loss function is given by

\[L=\sum_{i=1}^{m} \max \left(0, \mu-\operatorname{Sim}\left(q_{i}, d_{i}^{+}\right)+\operatorname{Sim}\left(q_{i}, d_{i}^{-}\right)\right),\]

where \(\operatorname{Sim}(q, d)\) is the similarity score produced by the network between the query and the document and \(\mu\) is a hyper-parameter adjusting the margin. Clearly, to make \(L\) small, we need \(\operatorname{Sim}(q_i, d_i^+) - \operatorname{Sim}(q_i, d^-_i) \geq \mu\). Commonly used \(\operatorname{Sim}\) functions include the dot product and cosine similarity (i.e., the length-normalized dot product), which are related to distance calculations in Euclidean space and on the hyperspherical surface, respectively.
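
The triplet loss can be sketched in a few lines of plain Python. The margin value `0.2` and the two-dimensional embeddings are arbitrary assumptions for illustration; in practice the embeddings would come from the query and document encoders.

```python
import math


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def cosine(u, v):
    """Cosine similarity, i.e., the length-normalized dot product."""
    return dot(u, v) / (math.sqrt(dot(u, u)) * math.sqrt(dot(v, v)))


def triplet_loss(q, d_pos, d_neg, margin=0.2, sim=cosine):
    """Hinge loss that is zero once sim(q, d+) exceeds sim(q, d-) by the margin."""
    return max(0.0, margin - sim(q, d_pos) + sim(q, d_neg))


q = [1.0, 0.0]
d_pos = [1.0, 0.0]   # aligned with the query, cosine similarity 1
d_neg = [0.0, 1.0]   # orthogonal to the query, cosine similarity 0

satisfied = triplet_loss(q, d_pos, d_neg)  # margin already met, loss is 0
violated = triplet_loss(q, d_neg, d_pos)   # roles swapped, loss is margin + 1
```

Once the margin is satisfied the triplet contributes no gradient, which is one reason the choice of negatives matters so much for this loss.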

Fig. 22.17 The illustration of the learning process (in the embedding space) using triplet loss.#

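Triplet loss can also operate in the angular space, where the similarity is derived from the angle between the query embedding \(e_q\) and the document embedding \(e_d\), for example

\[\operatorname{Sim}(q, d)=1-\frac{1}{\pi} \arccos \left(\frac{e_{q} \cdot e_{d}}{\left\|e_{q}\right\|\left\|e_{d}\right\|}\right).\]

As illustrated in Fig. 22.17, the training objective is to score the positive example \(d^{+}\) at least the margin \(\mu\) higher than the negative one \(d^{-}\), which corresponds to the triplet margin objective

\[l\left(q, d^{+}, d^{-}\right)=\max \left(0, \mu-\operatorname{Sim}\left(q, d^{+}\right)+\operatorname{Sim}\left(q, d^{-}\right)\right).\]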

22.5.3.4. N-pair Loss#

Triplet loss optimizes the neural encoders by encouraging the positive pair \((q_i, d^+_i)\) to be more similar than the negative pair \((q_i, d^-_i)\). One improvement is to encourage \(q_i\) to be more similar to \(d^+_i\) than to \(n\) negative examples \( d_{i, 1}^{-}, \cdots, d_{i, n}^{-}\), instead of just one negative example. This is known as N-pair loss [Soh16], and it is typically more robust than triplet loss.

Let \(\mathcal{D}=\left\{\left\langle q_{i}, d_{i}^{+}, D_i^-\right\rangle\right\}_{i=1}^{m}\) be the training data consisting of \(m\) examples, where \(D_i^- = \{d_{i, 1}^{-}, \cdots, d_{i, n}^{-}\}\) is a set of negative examples (i.e., irrelevant documents) with respect to query \(q_i\). Each example contains one query \(q_{i}\) and one relevant document \(d_{i}^{+}\), along with \(n\) irrelevant (negative) documents \(d_{i, j}^{-}\). The \(n\) negative documents are typically randomly sampled from a large corpus or strategically constructed [Section 22.6].

The learning process in the embedding space is visualized in Fig. 22.18. Like triplet loss, N-pair loss guides the encoder networks to pull a query and its relevant document closer together and to push a query and its irrelevant documents apart. In addition, when multiple negatives are involved in the N-pair loss, their mutual repulsion appears to help the model learn more uniform embeddings [WI20].

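The loss function is given by

\[L=-\sum_{i=1}^{m} \log \frac{\exp \left(\operatorname{Sim}\left(e_{q_{i}}, e_{d_{i}^{+}}\right)\right)}{\exp \left(\operatorname{Sim}\left(e_{q_{i}}, e_{d_{i}^{+}}\right)\right)+\sum_{j=1}^{n} \exp \left(\operatorname{Sim}\left(e_{q_{i}}, e_{d_{i, j}^{-}}\right)\right)},\]

where \(\operatorname{Sim}(e_q, e_d)\) is the similarity score function taking query embedding \(e_q\) and document embedding \(e_d\) as the input.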
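
For a single query, the N-pair loss amounts to a softmax cross-entropy in which the positive document competes against the \(n\) negatives. The sketch below assumes the similarity scores are already computed; the function name and the toy scores are illustrative.

```python
import math


def n_pair_loss(sim_pos, sim_negs):
    """Negative log-softmax of the positive score against the negative scores."""
    scores = [sim_pos] + list(sim_negs)
    top = max(scores)  # subtract the maximum for numerical stability
    log_denominator = top + math.log(sum(math.exp(s - top) for s in scores))
    return log_denominator - sim_pos


# One positive scored well above three negatives gives a small loss.
loss = n_pair_loss(5.0, [1.0, 0.0, -1.0])
# A positive that ties with a single negative gives log(2).
tie_loss = n_pair_loss(2.0, [2.0])
```

Unlike the hinge form of the triplet loss, this loss never reaches exactly zero, so every negative keeps contributing a (possibly small) gradient.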

Fig. 22.18 The illustration of the learning process (in the embedding space) using N-pair loss.#

22.5.3.5. N-pair Dual Loss#

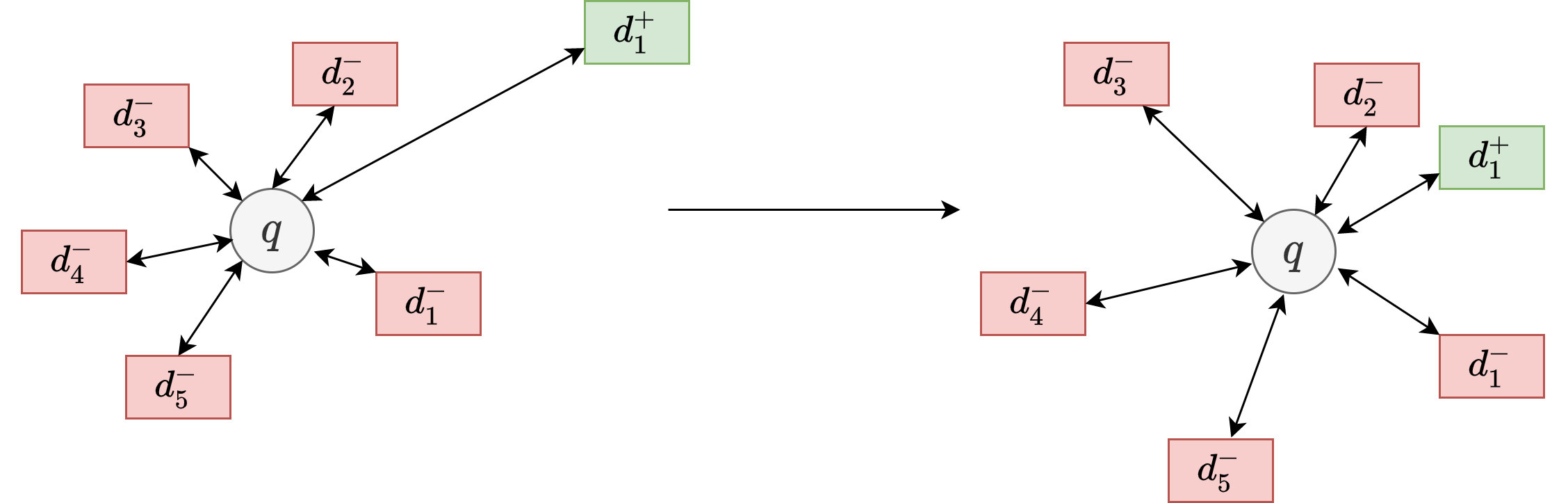

Fig. 22.19 The illustration of the learning process (in the embedding space) using N-pair dual loss.#

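The N-pair loss uses the query as the anchor to adjust the distribution of document vectors in the embedding space. The authors of [LLXL21] proposed that the document can also serve as the anchor to adjust the distribution of query vectors in the embedding space. This leads to a loss function consisting of two parts

\[\begin{aligned}
L &= L_{prime}+L_{dual}, \\
L_{prime} &= -\sum_{i=1}^{m} \log \frac{\exp \left(\operatorname{Sim}\left(e_{q_{i}}, e_{d_{i}^{+}}\right)\right)}{\exp \left(\operatorname{Sim}\left(e_{q_{i}}, e_{d_{i}^{+}}\right)\right)+\sum_{j=1}^{n} \exp \left(\operatorname{Sim}\left(e_{q_{i}}, e_{d_{i, j}^{-}}\right)\right)}, \\
L_{dual} &= -\sum_{i=1}^{m} \log \frac{\exp \left(\operatorname{Sim}\left(e_{d_{i}}, e_{q_{i}^{+}}\right)\right)}{\exp \left(\operatorname{Sim}\left(e_{d_{i}}, e_{q_{i}^{+}}\right)\right)+\sum_{j=1}^{n} \exp \left(\operatorname{Sim}\left(e_{d_{i}}, e_{q_{i, j}^{-}}\right)\right)},
\end{aligned}\]

where \(\operatorname{Sim}(e_q, e_d)\) is a symmetric similarity score function for the query and the document embedding vectors, \(L_{prime}\) is the N-pair loss, and \(L_{dual}\) is the N-pair dual loss.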

To compute the dual loss, we need to prepare training data \(\mathcal{D}_{dual}=\left\{\left\langle d_{i}, q_{i}^{+}, Q_i^-\right\rangle\right\}_{i=1}^{m}\), where \(Q_i^- = \{q_{i, 1}^{-}, \cdots, q_{i, n}^{-}\}\) is a set of negative queries (i.e., irrelevant queries) with respect to document \(d_i\). Each example contains one document \(d_{i}\) and one relevant query \(q_{i}^{+}\), along with \(n\) irrelevant (negative) queries \(q_{i, j}^{-}\).
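
The snippet below is a rough sketch of how the prime and dual parts might be computed together. It assumes, purely for illustration, that the negatives for each anchor are the other items of the same mini-batch, so both parts can be read off one similarity matrix; this batching choice and the function names are assumptions, not the construction described in [LLXL21].

```python
import math


def softmax_nll(pos_score, neg_scores):
    """Negative log-softmax of the positive score against the negative scores."""
    scores = [pos_score] + list(neg_scores)
    top = max(scores)
    return top + math.log(sum(math.exp(s - top) for s in scores)) - pos_score


def prime_dual_loss(sim):
    """sim[i][j] = Sim(q_i, d_j); the pair (q_i, d_i) is the relevant one."""
    n = len(sim)
    prime = 0.0
    dual = 0.0
    for i in range(n):
        # Prime: q_i is the anchor and the other documents are negatives (row i).
        prime += softmax_nll(sim[i][i], [sim[i][j] for j in range(n) if j != i])
        # Dual: d_i is the anchor and the other queries are negatives (column i).
        dual += softmax_nll(sim[i][i], [sim[j][i] for j in range(n) if j != i])
    return prime, dual


# Toy similarity matrix for a batch of two query-document pairs.
sim = [[3.0, 1.0],
       [0.0, 2.0]]
prime, dual = prime_dual_loss(sim)
total = prime + dual
```

The two parts generally differ because the rows and columns of the similarity matrix contain different negatives, even though the similarity function itself is symmetric.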

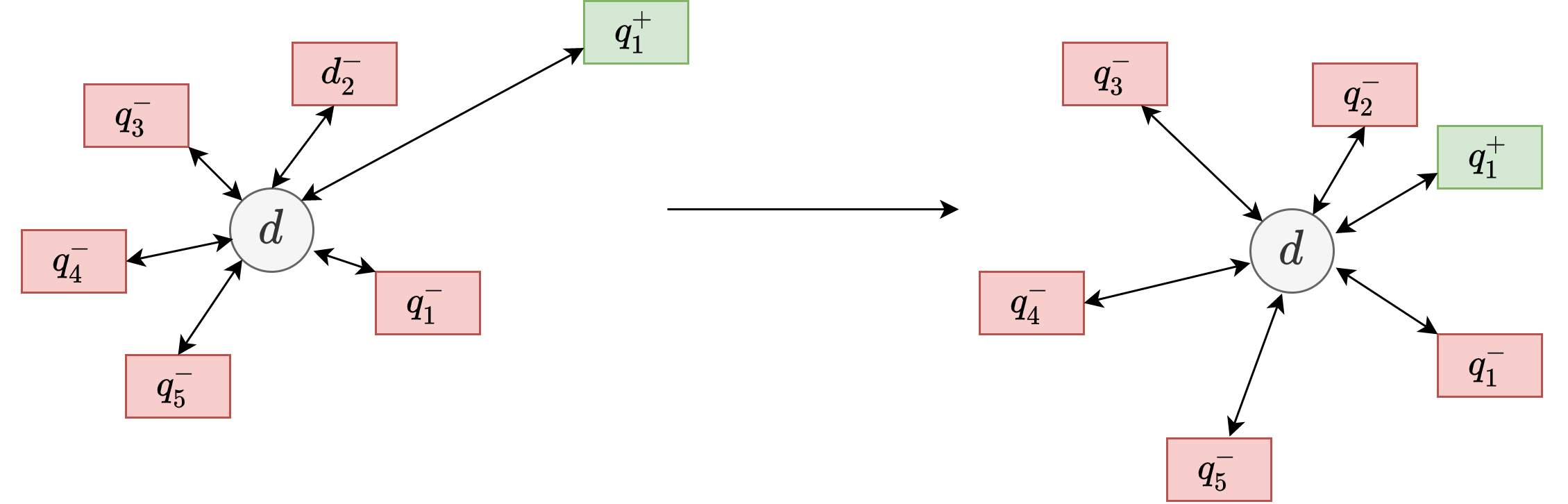

22.5.3.6. Doc-Doc N-pair Loss#

Fig. 22.20 The illustration of the learning process (in the embedding space) using Doc-Doc N-pair loss.#

Besides using the prime and dual losses above to capture robust query-document relationships, we can also improve the robustness of document representations by considering doc-doc relations. In particular,

When there are multiple positive documents associated with the same query, we use a loss function that encourages their embeddings to stay close.

For positive and negative documents associated with the same query, we use a loss function that encourages their embeddings to stay far apart.

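The loss function follows the same pattern as the N-pair loss, with a positive document as the anchor. One form consistent with the N-pair loss above is

\[L_{doc}=-\sum_{i=1}^{m} \log \frac{\exp \left(\operatorname{Sim}\left(e_{d_{i}^{+}}, e_{\tilde{d}_{i}^{+}}\right)\right)}{\exp \left(\operatorname{Sim}\left(e_{d_{i}^{+}}, e_{\tilde{d}_{i}^{+}}\right)\right)+\sum_{j=1}^{n} \exp \left(\operatorname{Sim}\left(e_{d_{i}^{+}}, e_{d_{i, j}^{-}}\right)\right)},\]

where \(d_{i}^{+}\) and \(\tilde{d}_{i}^{+}\) are two positive documents of query \(q_i\), \(d_{i,j}^{-}\) are its negative documents, and \(\operatorname{Sim}(e_{d_1}, e_{d_2})\) is the similarity score function taking document embeddings \(e_{d_1}\) and \(e_{d_2}\) as the input.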

22.6. Training Data Sampling Strategies#

22.6.1. Principles#

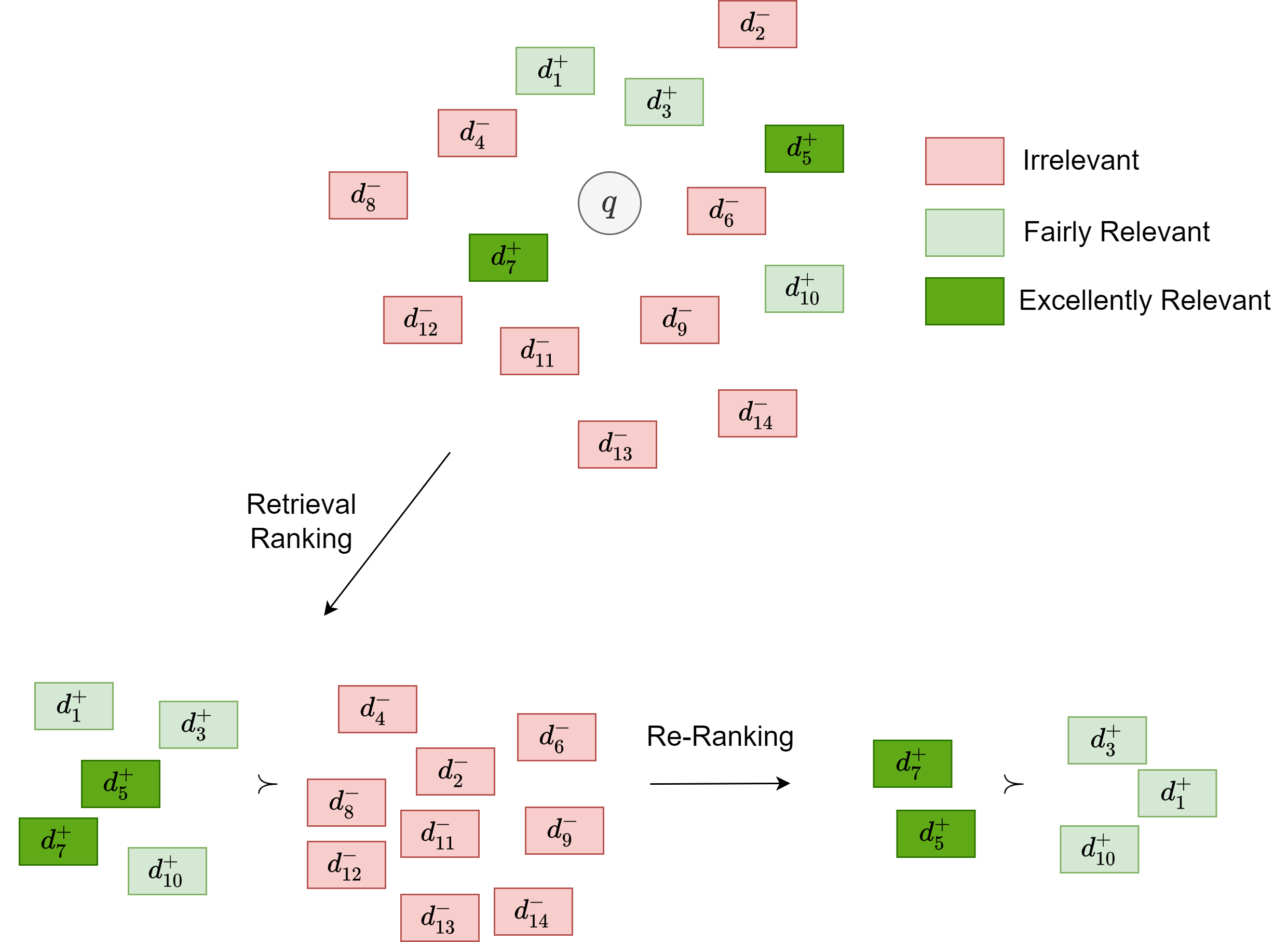

From the ranking perspective, both retrieval and re-ranking require generating an ordering over the input samples. For example, given a query \(q\) and a set of candidate documents \((d_1,...,d_N)\), we need the model to produce an ordered list such as \(d_2 \succ d_3 \succ \cdots \succ d_k\) according to their relevance to the query.

To train a model that produces the expected results during inference, we should ensure that the training data distribution matches the inference-time data distribution. In particular, the inference-time candidate document distribution and ranking granularity differ vastly between retrieval tasks and re-ranking tasks [Fig. 22.21]. Specifically,

For the retrieval task, we typically need to identify the top \(k\) (\(k = 1000\) to \(10000\)) relevant documents from the entire document corpus. This is achieved by ranking all documents in the corpus with respect to their relevance to the query.

For the re-ranking task, we need to identify the top \(k\) (\(k=10\)) most relevant documents from the relevant documents generated by the retrieval task.

Clearly, the features most useful in the retrieval task (i.e., distinguishing relevant from irrelevant) are often not the same as the features most useful in the re-ranking task (i.e., distinguishing most relevant from less relevant). Therefore, the training samples for retrieval and re-ranking need to be constructed differently.
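
The difference in candidate sets between the two stages can be seen in a toy two-stage pipeline. The sketch below assumes precomputed scores for both stages; the function names, document ids, and score values are hypothetical.

```python
def top_k(scores, k):
    """Return the k ids with the highest scores, best first."""
    return sorted(scores, key=scores.get, reverse=True)[:k]


def retrieve_then_rerank(retrieval_scores, rerank_fn, k_retrieve, k_final):
    """Stage 1 ranks the whole corpus; stage 2 only re-scores the survivors."""
    candidates = top_k(retrieval_scores, k_retrieve)
    rerank_scores = {doc: rerank_fn(doc) for doc in candidates}
    return top_k(rerank_scores, k_final)


# Cheap retrieval scores over the whole (toy) corpus.
retrieval_scores = {"d1": 0.9, "d2": 0.8, "d3": 0.1, "d4": 0.7}
# More accurate but more expensive scores, only evaluated on candidates.
fine_scores = {"d1": 0.3, "d2": 0.95, "d3": 0.99, "d4": 0.6}

result = retrieve_then_rerank(retrieval_scores, fine_scores.get,
                              k_retrieve=3, k_final=2)
```

Here `d3` never reaches the re-ranker because the retrieval stage filtered it out, which illustrates why the re-ranker only ever sees the retrieval stage's output distribution.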

Fig. 22.21 Retrieval tasks and re-ranking tasks face different candidate document distributions and ranking granularities.#

Constructing the proper training data distribution is more challenging for the retrieval stage than for the re-ranking stage. In the re-ranking stage, the data in both the training and inference phases are the documents from the previous retrieval stage. In the retrieval stage, we need to construct training examples in a mini-batch fashion such that each batch approximates the inference-time distribution as closely as possible.

This section mainly focuses on constructing training examples for retrieval model training in an efficient and effective way. Since negative examples (i.e., irrelevant documents) significantly outnumber positive examples, constructing training examples largely boils down to constructing proper negative examples.

22.6.2. Negative Sampling Methods I: Heuristic Methods#

22.6.2.1. Random Negatives and In-batch Negatives#

Random negative sampling is the most basic negative sampling algorithm. The algorithm uniformly samples documents from the corpus and treats them as negatives. Clearly, random sampling can generate negatives that are too easy for the model, for example, a negative document that is topically different from the query. These easy negatives lower the learning efficiency; that is, each batch provides limited information gain for updating the model. Still, random negatives are widely used because of their simplicity.

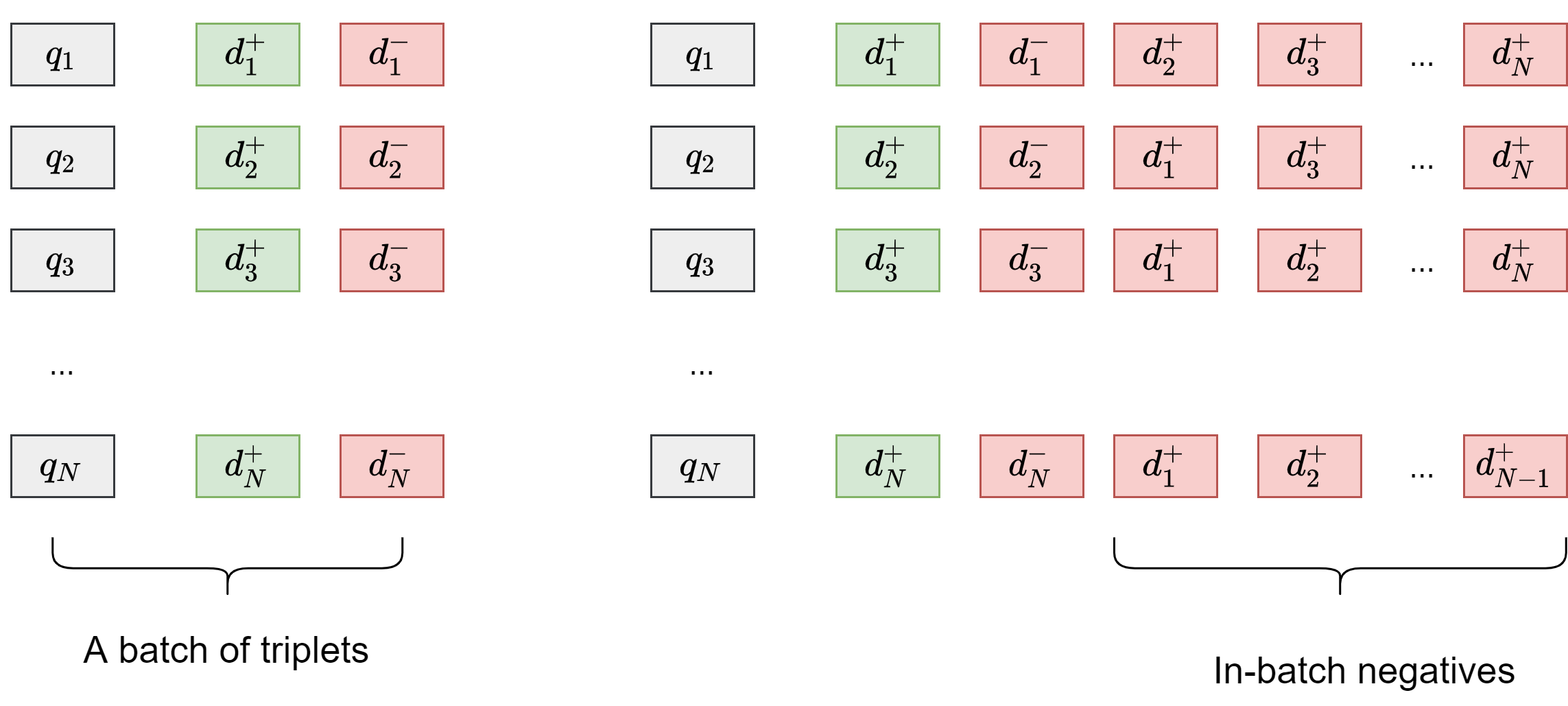

In practice, random negatives are implemented as in-batch negatives. In the contrastive learning framework with N-pair loss [Section 22.5.3.4], we construct a mini-batch of query-doc examples like \(\{(q_1, d_1^+, d_{1,1}^-, \ldots, d_{1,M}^-), \ldots, (q_N, d_N^+, d_{N,1}^-, \ldots, d_{N,M}^-)\}\). Naively implementing N-pair loss incurs a high computational cost, since sufficient negative documents must be constructed for each query. In-batch negatives [KOuguzM+20] is a trick that reuses the positive documents associated with other queries as extra negatives [Fig. 22.22]. The critical assumption here is that queries in a mini-batch are vastly different semantically, so the positive documents of other queries can be confidently used as negatives. The assumption is largely true: since each mini-batch is randomly sampled from the set of all training queries, in-batch negative documents are usually true negatives, although they might not be hard negatives.

Fig. 22.22 The illustration of using in-batch negatives in contrastive learning.#

Specifically, assume that we have \(N\) queries in a mini-batch and each one is associated with a relevant positive document. By using the positive documents of the other queries, each query obtains an additional \(N - 1\) negatives.

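Formally, we can define our batch-wise loss function as follows:

\[L=\sum_{i=1}^{N} \sum_{j=1, j \neq i}^{N} l\left(q_{i}, d_{i}^{+}, d_{j}^{-}\right),\]

where \(l\left(q_{i}, d_{i}^{+}, d_{j}^{-}\right)\) is the loss function for a triplet and \(d_{j}^{-}\) denotes the positive document of query \(q_j\) (\(j \neq i\)) reused as a negative for \(q_i\).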
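
A minimal sketch of the batch-wise loss with in-batch negatives is shown below, using a hinge-style triplet loss with dot-product similarity as the per-triplet loss. The margin value and the toy embeddings are assumptions for illustration only.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def in_batch_triplet_loss(queries, pos_docs, margin=1.0):
    """Sum triplet losses where each query uses the other queries' positives as negatives."""
    n = len(queries)
    # sim[i][j] = Sim(q_i, d_j^+); diagonal entries are the true pairs.
    sim = [[dot(q, d) for d in pos_docs] for q in queries]
    total = 0.0
    for i in range(n):
        for j in range(n):
            if j != i:  # d_j^+ acts as a negative for q_i
                total += max(0.0, margin - sim[i][i] + sim[i][j])
    return total


# Batch of two queries with their positive documents (toy 2-d embeddings).
queries = [[1.0, 0.0], [0.0, 1.0]]
pos_docs = [[1.0, 0.0], [0.5, 1.0]]
loss = in_batch_triplet_loss(queries, pos_docs)
```

All \(N^2\) similarities come from the \(N\) query and \(N\) document embeddings already in the batch, so the extra \(N - 1\) negatives per query require no additional encoder passes.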

In-batch negatives offer an efficient implementation of random negatives. Another way to mitigate the inefficient learning issue is simply to use a large batch size (>4,000) [Large-Scale Negatives].

22.6.2.2. Popularity-based Negative Sampling#

Popularity-based negative sampling uses document popularity as the sampling weight to sample negative documents. The popularity of a document can be defined as some combination of clicks, dwell time, quality, etc. Compared to random negative sampling, this algorithm replaces the uniform distribution with a popularity-based sampling distribution, which can be pre-computed offline.

The major rationale for using popularity-based negative examples is to improve representation learning. Popular negative documents are harder negatives than unpopular ones since they tend to have a higher chance of being relevant; that is, they lie closer to the query in the embedding space. If the model is trained to distinguish these harder cases, the overall learned representations are likely to improve.
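
Popularity-weighted sampling can be sketched with the standard library as follows. The popularity values, the function name, and the fixed seed are illustrative assumptions; sampling is done with replacement for simplicity.

```python
import random


def sample_popular_negatives(popularity, positives, num_neg, seed=0):
    """Sample negatives with probability proportional to document popularity."""
    rng = random.Random(seed)
    # Known positives of the query must never be drawn as negatives.
    candidates = [d for d in popularity if d not in positives]
    weights = [popularity[d] for d in candidates]
    # random.choices samples with replacement, proportionally to the weights.
    return rng.choices(candidates, weights=weights, k=num_neg)


# Hypothetical popularity scores (e.g., aggregated clicks) per document.
popularity = {"d1": 100.0, "d2": 10.0, "d3": 1.0, "d4": 50.0}
negs = sample_popular_negatives(popularity, positives={"d1"}, num_neg=1000)
```

Because the weights do not depend on the query, the sampling distribution can be pre-computed once and reused for every training batch.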

Popularity-based negative sampling is also used in word2vec training [MSC+13]. For example, the probability of sampling a word \(w_i\) is given by:

\[P\left(w_{i}\right)=\frac{f\left(w_{i}\right)^{3 / 4}}{\sum_{j} f\left(w_{j}\right)^{3 / 4}},\]

where \(f(w)\) is the frequency of word \(w\). Compared to linear popularity, this equation tends to increase the probability of less frequent words and decrease the probability of more frequent words.
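
The effect of the 3/4 power can be checked numerically. The word frequencies below are made-up values chosen only to make the comparison with linear popularity visible.

```python
def smoothed_sampling_probs(freqs, power=0.75):
    """Sampling distribution proportional to frequency raised to `power`."""
    weights = {w: f ** power for w, f in freqs.items()}
    total = sum(weights.values())
    return {w: v / total for w, v in weights.items()}


# Made-up corpus frequencies spanning several orders of magnitude.
freqs = {"the": 10000, "retrieval": 100, "bm25": 1}
probs = smoothed_sampling_probs(freqs)

# Linear popularity for comparison (power = 1).
linear = smoothed_sampling_probs(freqs, power=1.0)
```

With these numbers the most frequent word loses probability mass relative to linear popularity, while the two rarer words gain it.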

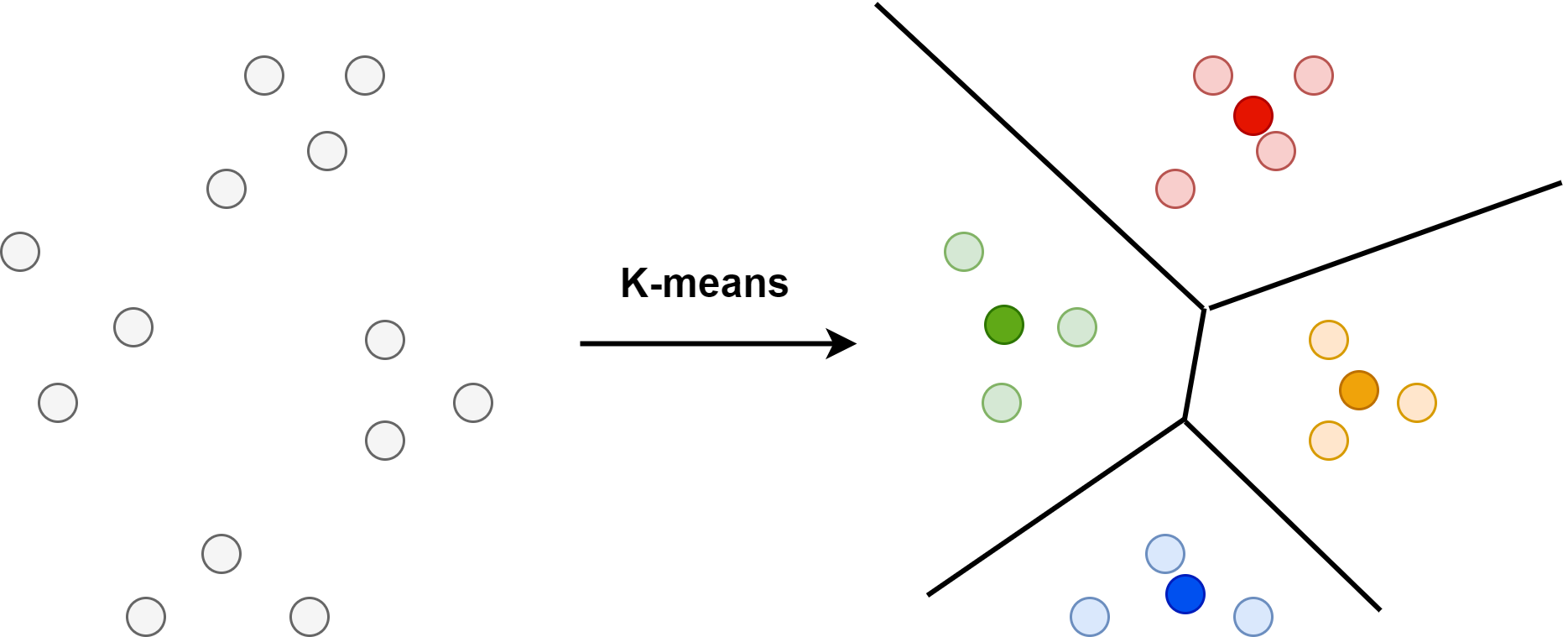

22.6.2.3. Topic-aware Negative Sampling#

In-batch random negatives often consist of topically different documents, leaving little information gain for training. To improve the information gain from a single random batch, we can constrain the queries and their relevant documents to be drawn from a similar topic [HofstatterLY+21].

The procedure is as follows:

Cluster queries using query embeddings produced by a basic query encoder.

Sample queries and their relevant documents from a randomly picked cluster. The relevant document of one query serves as a negative for the other queries in the batch.

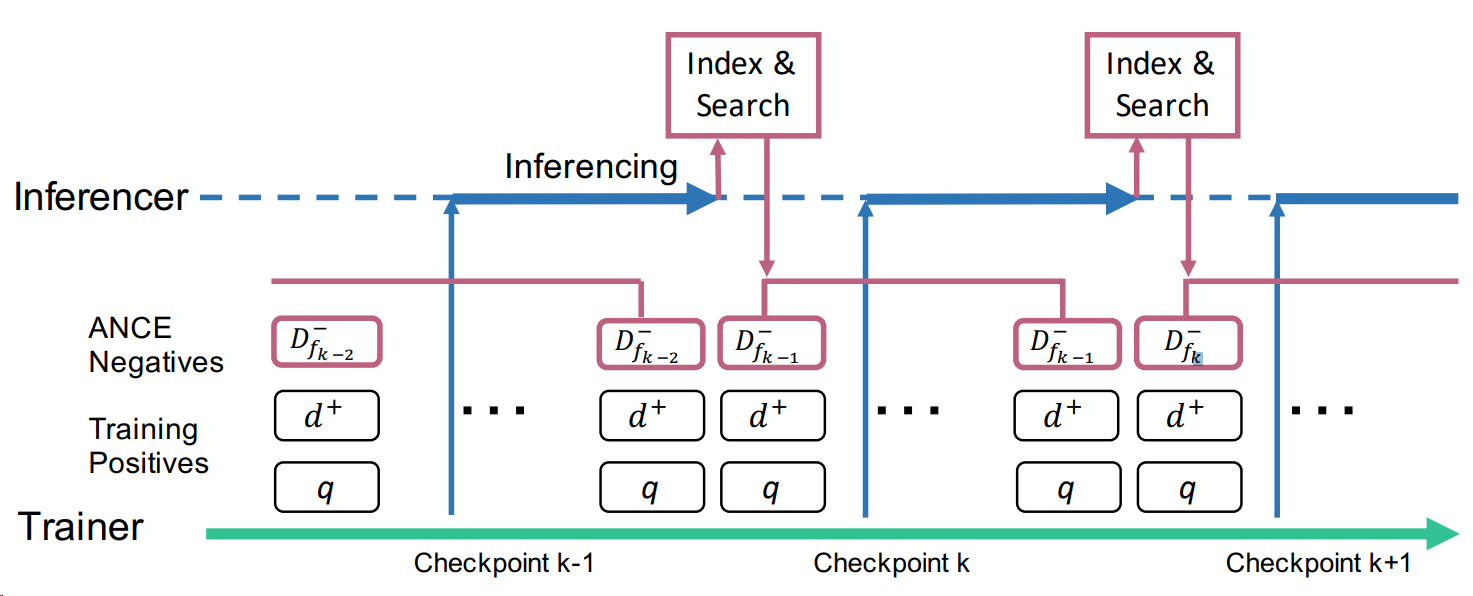

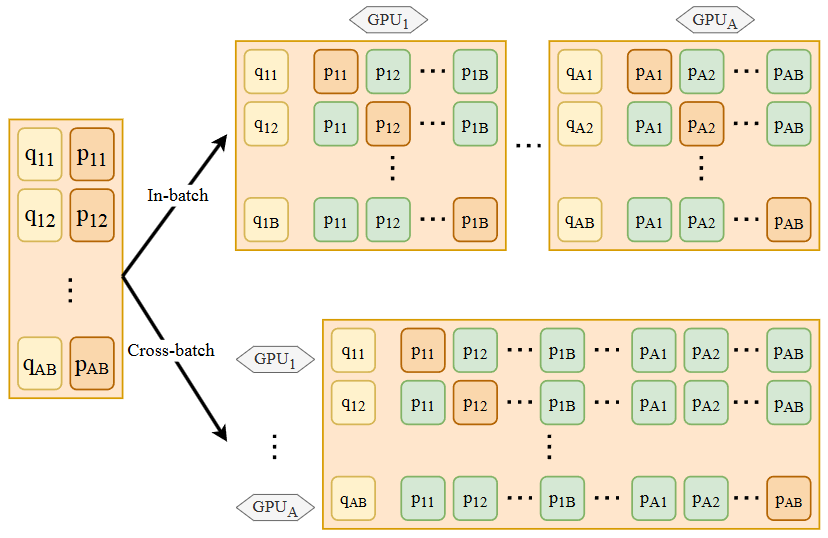

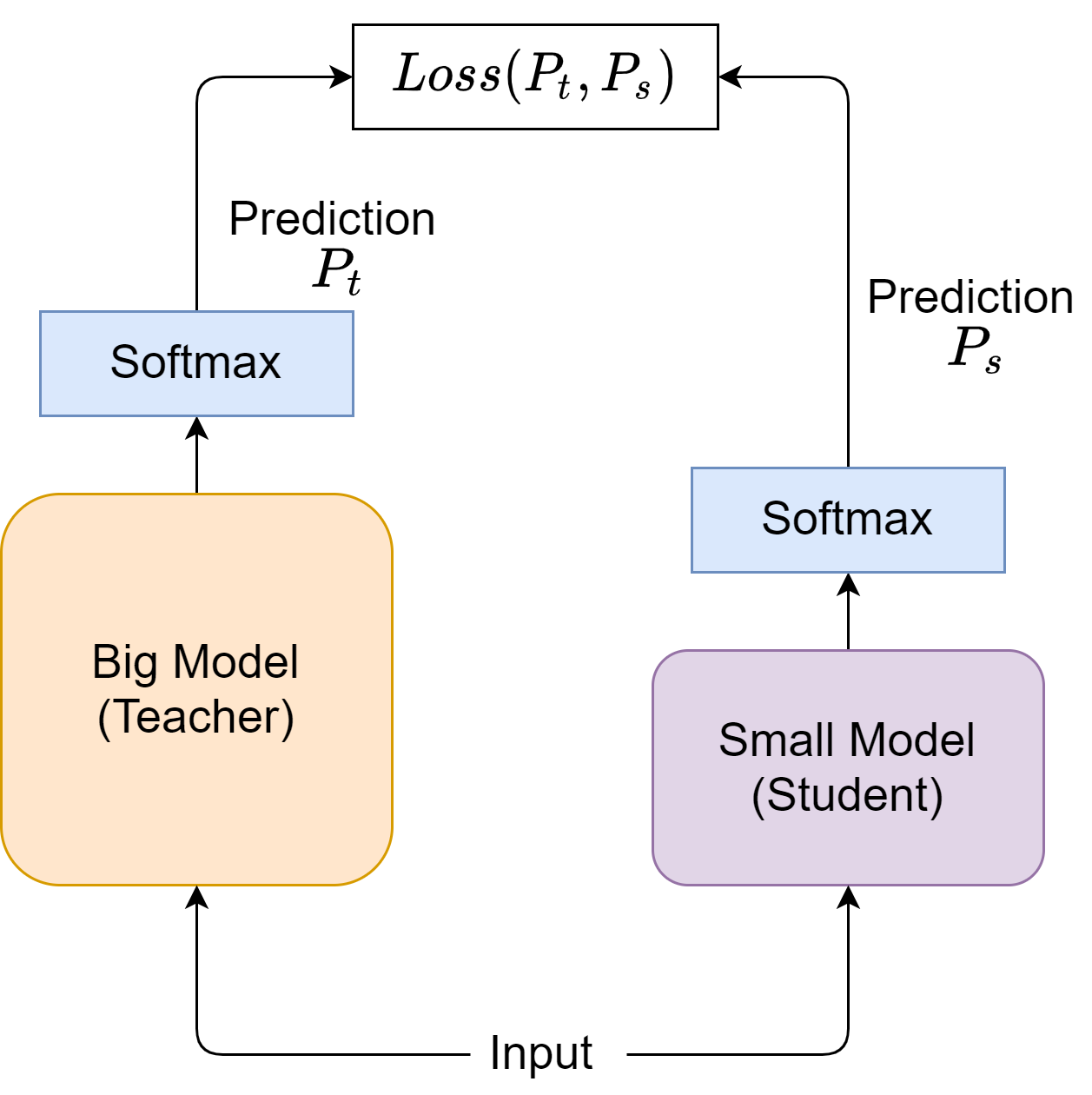

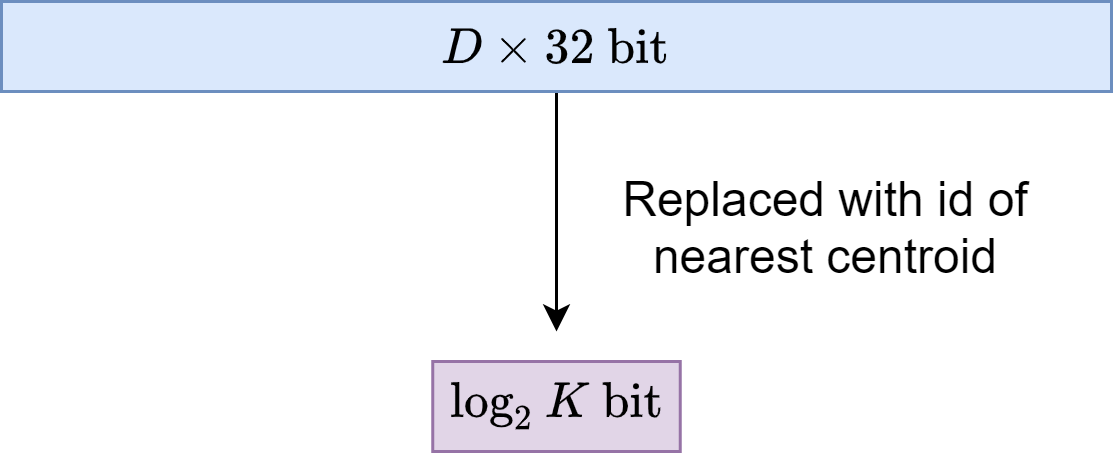

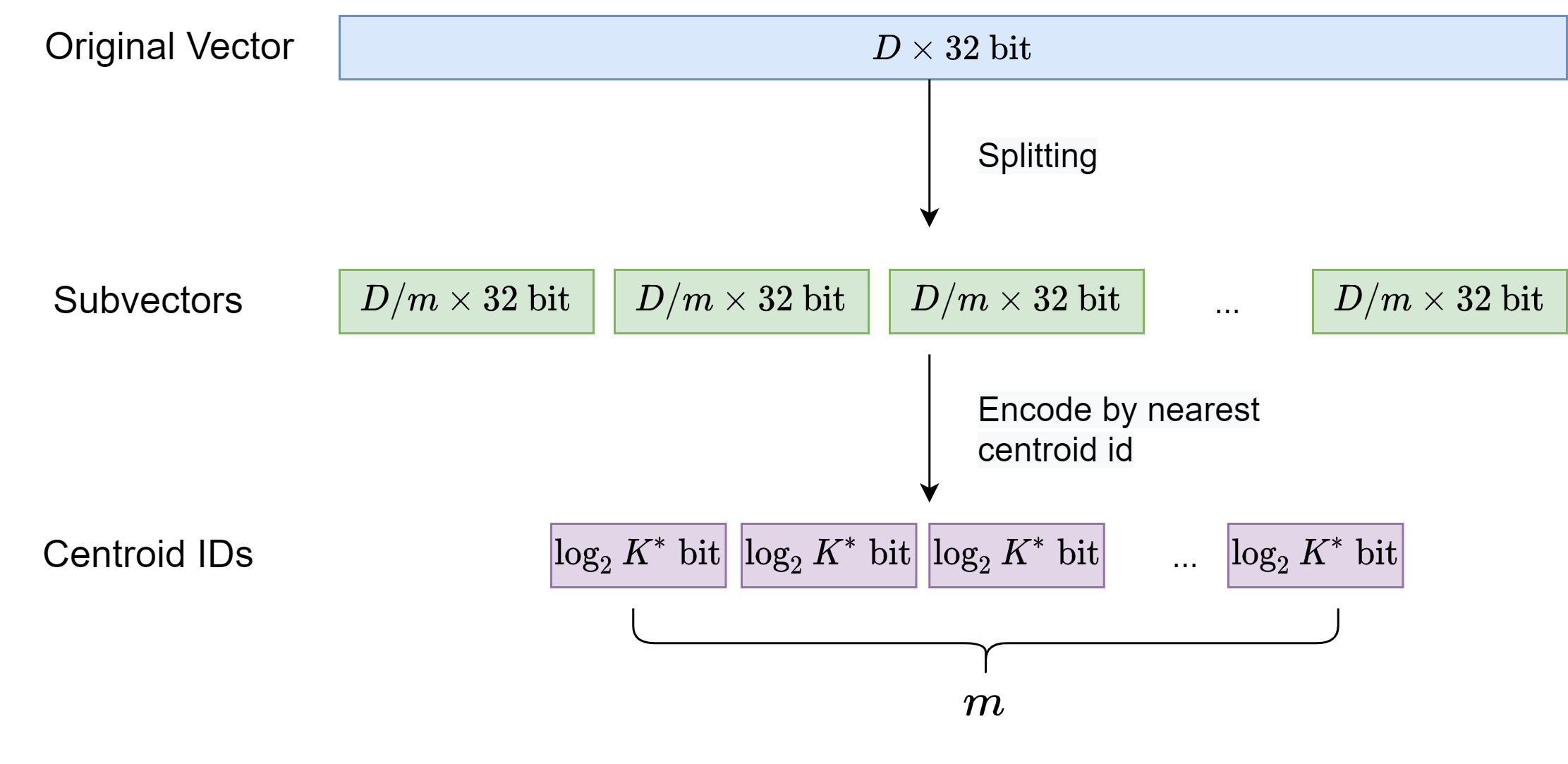

Since the queries are topically similar, the resulting in-batch negatives are harder examples than randomly formed in-batch negatives, and therefore deliver more information gain in each batch.
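
The batch construction step can be sketched as below. The sketch assumes the clustering has already been done (for example, with k-means on query embeddings) and that cluster ids are available; the function name, the toy query-document pairs, and the fixed seed are hypothetical.

```python
import random
from collections import defaultdict


def topic_aware_batch(pairs, cluster_ids, batch_size, seed=0):
    """Draw one batch of (query, positive_doc) pairs from a single random cluster.

    pairs: list of (query, positive_doc) tuples.
    cluster_ids: cluster id of each query, aligned with `pairs`.
    """
    rng = random.Random(seed)
    clusters = defaultdict(list)
    for pair, cid in zip(pairs, cluster_ids):
        clusters[cid].append(pair)
    # Only clusters large enough to fill a batch are eligible.
    eligible = [cid for cid, members in clusters.items()
                if len(members) >= batch_size]
    chosen = rng.choice(eligible)
    # Sampling without replacement keeps the queries in a batch distinct.
    return chosen, rng.sample(clusters[chosen], batch_size)


pairs = [("q_cat", "d_cat"), ("q_dog", "d_dog"), ("q_pet", "d_pet"),
         ("q_tax", "d_tax"), ("q_loan", "d_loan"), ("q_bank", "d_bank")]
cluster_ids = [0, 0, 0, 1, 1, 1]  # assumed output of a clustering step
cid, batch = topic_aware_batch(pairs, cluster_ids, batch_size=2)
```

The returned batch can be fed directly into an in-batch negative loss, where each query's positive document acts as a negative for the other queries from the same topic.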